Applications of Neural Networks to Music Generation

By Anna Mikhailova

Note: this page is incomplete.

Music generation, also termed algorithmic composition or music synthesis, is the process of generating music via an algorithm (i.e., a formal set of rules). Algorithmic music composition has been part of the human experience for millennia, transcending cultures and continents. It ranges from music generated without any human intervention, such as that of wind chimes, to music generated by intelligent systems. The application of neural networks to music generation is of particular interest because of the similarity between musical composition and the structure of natural language. Music also poses a challenge similar to that of language: any given instance of “music” can contain more than one musical unit (i.e., a note). It can consist of several notes sounding together (a chord) and/or carry other qualities such as volume. Creating “convincing” music, that is, music that sounds as though it could have been produced by a human, is riddled with challenges. Although not all algorithmic music has aimed to produce human-like creations, that goal has been at the forefront of ongoing research.


A Brief History

In the absence of modern technology (pre-1700), the precursor to what we now call algorithmic music was automatic music: music arising from natural, or automatically occurring, processes. Automatic music generation has allegedly been a human obsession since biblical times. Legend has it that King David hung a lyre above his bed to catch the wind at night and thus produce music [1]. These wind-played stringed instruments, known as Aeolian harps [1], have been found throughout history in China, Ethiopia, Greece, Indonesia, India, and Melanesia [1]. Music generated by the wind exists to this day in the form of wind chimes, which first appeared in Ancient Rome and later, in the 2nd century CE, in India and China [3]. Their Japanese counterpart, the fūrin, has been in use since the Edo period (1603–1867) [4]. The Japanese also had the suikinkutsu [5], a water instrument that likewise originated in the middle of the Edo period.

The transition from automatic to algorithmic music began in the late 18th century with the creation of the Musikalisches Würfelspiel [6], a game that used dice to randomly assemble music from pre-composed options. The game can thus be viewed as a lookup table in which each roll of the dice selects a particular pre-composed segment of music. It is analogous to very basic sentence construction: randomly picking the components of a sentence, one at a time, from a set of available options. This type of algorithmic construction persisted until the formalization of Markov Chains in the early 20th century.
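A minimal sketch of the dice game as a table lookup, in Python. It assumes a hypothetical 8-bar piece in which the sum of two dice (2 to 12) selects one of 11 placeholder segments for each bar. The bar count, the segment labels, and the names `TABLE`, `roll_two_dice`, and `compose` are illustrative assumptions, not the historical game's actual table.

```python
import random

# Hypothetical lookup table: for each bar position, the sum of two dice
# (2-12) selects one of 11 pre-composed segments. Labels such as "b3-s7"
# are placeholders standing in for real pre-composed bars of music.
NUM_BARS = 8
TABLE = {
    bar: {roll: f"b{bar}-s{roll}" for roll in range(2, 13)}
    for bar in range(1, NUM_BARS + 1)
}


def roll_two_dice(rng: random.Random) -> int:
    """Return the sum of two six-sided dice (a value from 2 to 12)."""
    return rng.randint(1, 6) + rng.randint(1, 6)


def compose(rng: random.Random) -> list:
    """Pick one pre-composed segment per bar by rolling the dice.

    Each bar is chosen independently: the roll for one bar does not
    depend on which segment was chosen for the bar before it.
    """
    return [TABLE[bar][roll_two_dice(rng)] for bar in range(1, NUM_BARS + 1)]


if __name__ == "__main__":
    piece = compose(random.Random(0))
    print(" | ".join(piece))
```

Because the sum of two dice is not uniform (a 7 is six times as likely as a 2 or a 12), the middle entries of such a table are chosen far more often than the edges. Each bar is also drawn without regard to the previous one, which is the property a Markov model changes.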

Markov Models

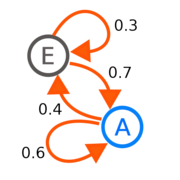

The formalization of the Markov Model [7] shifted the landscape of algorithmic music. With the help of Markov Models, the dice game was extended one step further: whereas each roll of the dice selected a segment independently of the previous one, a Markov Model makes each choice depend on the current state. Markov Models are built on Markov Chains [8], statistical models of systems that move between states, where the probability of each possible “next” state depends solely on the current state of the system. Just as the Musikalisches Würfelspiel could be represented as a table, a Markov Chain is encoded as a transition matrix, which contains the probability of transitioning from any one state of the system to any other. Because these probabilities are parameters rather than fixed choices, Markov Models can be trained: the transition matrices can be calculated, and adjusted, from existing data instead of requiring explicitly composed fragments [2].
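Stated in standard notation (not taken from the cited sources), with X_t denoting the state, e.g. the chord, at step t, the Markov property, the constraint on each row of the transition matrix P, and the usual count-based estimate of its entries are:

```latex
\begin{aligned}
\Pr(X_{t+1} = j \mid X_t = i, X_{t-1} = i_{t-1}, \dots, X_0 = i_0)
  &= \Pr(X_{t+1} = j \mid X_t = i) = P_{ij}, \\
\sum_{j} P_{ij} &= 1 \quad \text{for every state } i, \\
\hat{P}_{ij} &= \frac{N_{ij}}{\sum_{k} N_{ik}},
\end{aligned}
```

Here N_ij is the number of times state i is immediately followed by state j in the training data. This is the maximum-likelihood estimate, i.e. the count-and-normalize procedure described in the steps below; note that N_ij and N_ji are separate counts, so P is generally not symmetric.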

In 1958, Iannis Xenakis [9], a Greek-French composer, used Markov Models to generate two algorithmic pieces of music, Analogique A and Analogique B [10]. Xenakis details his process and the mathematics behind his work in Formalized Music: Thought and Mathematics in Composition [3]. Later, in 1981, David Cope [11], Dickerson Emeritus Professor at the University of California, Santa Cruz, built on Xenakis’s methodology by combining Markov Chains with musical grammars and combinatorics, creating Emmy (Experiments in Musical Intelligence) [12] [2], a semi-intelligent analysis system that is quite adept at imitating human composers [4].

One prominent application of Markov models is speech recognition, where hidden Markov models are decoded with the Viterbi algorithm. The basic procedure for a generator built on a Markov Chain, whether for natural language or, for our purposes, for music, is as follows [5] [6]:

- Take a set of music data, i.e. existing compositions represented as sequences of chords (generally, the larger the set, the better).

- Calculate the probability of the transition between every ordered pair of chords (in practice these are often stored as log probabilities, which avoids numerical underflow when many of them are multiplied together). This is done by counting the number of transitions from state A to state B and normalizing this count by the total number of transitions out of state A. Note that transitions are directional: the probability of moving from A to B is estimated separately from that of moving from B to A, so the two generally differ.

- Define a starting state, i.e. a first chord. This chord can be randomly generated or predetermined.

- From the current state, randomly pick the next state, weighting each possible next state by its transition probability from the current state.

- Repeat the previous step until the desired length of the composition is reached.

This GitHub code [13] demonstrates this algorithm in practice.
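The steps above can be sketched in a few lines of Python. This is a minimal, hypothetical first-order chain, not the code linked at [13] or the code from the cited articles: the toy `CORPUS` of chord symbols and the names `train` and `generate` are assumptions made for illustration. It keeps plain probabilities rather than log probabilities, because sampling with `random.Random.choices` needs ordinary weights; log probabilities matter mainly when scoring whole sequences.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy corpus: each piece is a sequence of chord symbols.
CORPUS = [
    ["C", "G", "Am", "F", "C", "G", "F", "C"],
    ["C", "F", "G", "C", "Am", "F", "G", "C"],
    ["Am", "F", "C", "G", "Am", "F", "G", "Am"],
]


def train(corpus):
    """Estimate P(next | current) by counting and normalizing transitions.

    Returns {current: {next: probability}}. Transitions are directional:
    A -> B and B -> A are counted separately.
    """
    counts = defaultdict(Counter)
    for piece in corpus:
        for current, nxt in zip(piece, piece[1:]):
            counts[current][nxt] += 1
    model = {}
    for current, successors in counts.items():
        total = sum(successors.values())  # all transitions out of `current`
        model[current] = {nxt: n / total for nxt, n in successors.items()}
    return model


def generate(model, start, length, rng):
    """Random walk through the chain, beginning at `start`.

    Each next chord is drawn with probability P(next | current chord).
    Stops early if the current chord has no recorded successors.
    """
    sequence = [start]
    while len(sequence) < length:
        successors = model.get(sequence[-1])
        if not successors:
            break  # dead end: this chord was never followed by anything
        chords = list(successors)
        weights = list(successors.values())
        sequence.append(rng.choices(chords, weights=weights, k=1)[0])
    return sequence


if __name__ == "__main__":
    model = train(CORPUS)
    print(model["C"])  # → {'G': 0.6, 'F': 0.2, 'Am': 0.2}
    print(" ".join(generate(model, "C", 16, random.Random(0))))
```

Because `generate` only ever samples successors that were counted in `train`, every adjacent pair of chords in its output already occurs somewhere in `CORPUS`; only the way those pairs are chained together can be new.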

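However, although Markov Chains are intuitive, easy to build, and go a step beyond the algorithmic music of the 18th and 19th centuries, they are limited in that they can only reproduce transitions that exist in the data set(s) the model was trained on [7]. A chain may string those transitions together into sequences that never appeared as a whole, but because each choice depends only on the current state, it cannot capture longer-range structure such as phrases or recurring themes.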

![RNN0](RNN0.png)

Recurrent Neural Networks (RNNs)

Long Short-Term Memory Cells (LSTMs)

Ongoing Projects – MuseNet

References

- [1] The Editors of Encyclopaedia Britannica. (2019, October 11). Aeolian harp. Encyclopedia Britannica. https://www.britannica.com/art/Aeolian-harp

- [2] McDonald, K. (2018, November 13). Neural nets for generating music. Medium. Retrieved November 17, 2022, from https://medium.com/artists-and-machine-intelligence/neural-nets-for-generating-music-f46dffac21c0

- [3] Xenakis, I., & Kanach, S. (1992). Formalized music: Thought and mathematics in composition. Pendragon Press. Retrieved from https://monoskop.org/images/7/74/Xenakis_Iannis_Formalized_Music_Thought_and_Mathematics_in_Composition.pdf

- [4] Garcia, C. (2019, September 25). Algorithmic music – David Cope and EMI. CHM. Retrieved November 18, 2022, from https://computerhistory.org/blog/algorithmic-music-david-cope-and-emi/

- [5] Osipenko, A. (2019, July 7). Markov chain for music generation. Towards Data Science. Retrieved November 18, 2022, from https://towardsdatascience.com/markov-chain-for-music-generation-932ea8a88305

- [6] Quattrini Li, A., & Pierson, T. (n.d.). PS-5. CS 10: Problem Solving via Object-Oriented Programming. Retrieved November 18, 2022, from https://www.cs.dartmouth.edu/~cs10/schedule.html

- [7] Goldberg, Y. (n.d.). The unreasonable effectiveness of character-level language models [Web log]. Retrieved November 18, 2022, from https://nbviewer.org/gist/yoavg/d76121dfde2618422139