Long Short-Term Memory

By Alphonso Bradham

Note: This page is currently a work in progress

Long Short-Term Memory (LSTM) is a recurrent neural network architecture used for classification, regression, encoding, and decoding tasks on long sequences or time-series data. LSTMs were developed to counter the vanishing gradient problem. Their key feature is a "cell state" vector that lets the network keep track of long-range relationships in data that other models would "forget".

Background on Sequence Data and RNNs

For data where sequence order is important, traditional feed-forward neural networks often struggle to encode the temporal relationships between inputs. While suitable for simple regression and classification tasks on independent samples of data, a feed-forward network processes each input on its own through a fixed chain of matrix-vector operations. It therefore has no capacity for "memory" and cannot accurately handle sequence data, where the output of the network on data point t depends on the value of a previously examined data point t-1.

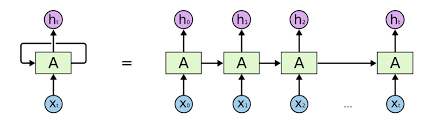

It is in these situations, where a sequential memory is desired, that Recurrent Neural Networks (RNNs) are employed. Put simply, recurrent neural networks differ from traditional feed-forward neural networks in that neurons have connections that point "backwards" to reference earlier observations. Operationally, this means an RNN takes two inputs at each time step: the input data at the current time "t", as in a feed-forward neural network, and the network's own output at the previous time step "t-1". The input from time step "t-1" serves as the network's "memory", since that previous output itself depended on the outputs of the time steps before it, and so on back to the beginning of the sequence. It is this capacity for memory, built into the rule by which an RNN computes its outputs (its performance rule), that makes RNNs well suited to sequence data tasks.
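The recurrence described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the function names `rnn_step` and `rnn_forward`, the tanh activation, the zero initial state, and the toy weights are all assumptions chosen for the example.

```python
import math

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One recurrent step: h_t = tanh(W_x @ x_t + W_h @ h_prev + b)."""
    h_t = []
    for i in range(len(b)):
        total = b[i]
        # Contribution of the current input at time t.
        total += sum(W_x[i][j] * x_t[j] for j in range(len(x_t)))
        # Contribution of the previous output at time t-1 (the "memory").
        total += sum(W_h[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_t.append(math.tanh(total))
    return h_t

def rnn_forward(xs, W_x, W_h, b):
    """Run the recurrence over a whole sequence, starting from a zero state."""
    h = [0.0] * len(b)
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_x, W_h, b)
        states.append(h)
    return states

# Hypothetical toy weights: 1 input feature, 2 recurrent units.
W_x = [[0.5], [-0.3]]
W_h = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.0]

# Both sequences end with the same input (0.0) but differ at the first step.
states_a = rnn_forward([[1.0], [0.0]], W_x, W_h, b)
states_b = rnn_forward([[0.0], [0.0]], W_x, W_h, b)
print(states_a[1], states_b[1])
```

Although both sequences present the same input at the final step, the final outputs differ because the first sequence's earlier input is carried forward through `h_prev`. A feed-forward network given only the final input could not tell the two cases apart.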

Vanishing Gradient Problem

As mentioned above, although the way RNNs compute their outputs endows them with a capacity for memory, their learning is still defined by the same back-propagation learning rule used in feed-forward neural networks. One side effect of the back-propagation algorithm is that the updates reaching early weights tend to "diminish" as the network grows in depth. Weights are updated in proportion to the derivative of the error function with respect to each weight, and by the chain rule the derivative for an early layer is a product containing one factor for every layer that follows it. When those factors (activation derivatives multiplied by weights) are typically smaller than 1 in magnitude, the product shrinks roughly exponentially with depth, so the early layers of a network (whose gradients are computed last in back-propagation) receive extremely small adjustments to their weights.

Put more simply: the deeper the network, the smaller the changes its earlier layers are able to undergo. This is particularly troublesome for RNNs, because unrolling a recurrent network over a sequence gives it an effective depth of one layer per time step, so long sequences produce very deep networks. Especially where long-range context and memory are desired, the vanishing gradient problem can severely hinder the ability of an RNN to accurately model and interpret sequence data. Avoiding the penalty of vanishing gradients in RNNs is the primary motivation underpinning LSTM models.
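The shrinking effect can be seen numerically in a one-unit recurrent network. The sketch below assumes a scalar recurrence h_t = tanh(w * h_{t-1} + x_t), which is a simplification chosen for illustration; the function name `gradient_through_time` and the values of `w` and `xs` are hypothetical. By the chain rule, the derivative of h_t with respect to the initial state is the product of the per-step factors w * (1 - h_k^2), each of which has magnitude at most |w|.

```python
import math

def gradient_through_time(w, xs, h0=0.0):
    """Return |d h_t / d h_0| after each step of h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    magnitudes = []
    for x_t in xs:
        h = math.tanh(w * h + x_t)
        # Chain rule: multiply by this step's local derivative,
        # d h_t / d h_{t-1} = w * (1 - h_t^2).
        grad *= w * (1.0 - h * h)
        magnitudes.append(abs(grad))
    return magnitudes

# Assumed toy setting: recurrent weight 0.5, 50 time steps of constant input.
mags = gradient_through_time(w=0.5, xs=[0.1] * 50)
print(mags[0], mags[9], mags[49])
```

With a recurrent weight of 0.5, every factor is at most 0.5, so after 50 steps the gradient magnitude is bounded by 0.5 ** 50. An error signal at the end of the sequence therefore has almost no influence on how the network treats its earliest inputs.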

LSTM Architecture

To combat the vanishing gradient problem, LSTM models implement