Applications of Neural Networks to Music Generation


Revision as of 23:39, 21 October 2022

By Anna Mikhailova

Note: this page is incomplete.

Music generation, alternatively termed algorithmic composition or music synthesis, is the process of generating music via an algorithm (i.e. some formal set of rules). Algorithmic music composition has been a part of the human experience for millennia, transcending cultures and continents. It ranges from music generated without human intervention (e.g. wind chimes) to music generated by intelligent systems. The application of neural networks to music generation is of particular interest because of the similarity between music composition and the structuring of natural language. As with language, one challenge is that any given instance of “music” can contain more than a single musical unit (i.e. a note): it can consist of several notes sounded together (a chord) and carry other qualities such as volume. The creation of “convincing” music, or music that sounds like it could have been produced by a human, is riddled with challenges. Although not all algorithmic music has aimed to produce human-like creations, that goal has been at the forefront of ongoing research.


A Brief History

In the absence of modern technology (pre-1700), the precursor to what we now call algorithmic music was automatic music: music arising from naturally, or automatically, occurring processes. Automatic music generation has allegedly been a human obsession since biblical times. Legend has it that King David hung a lyre above his bed to catch the wind at night and thus produce music[1]. Such wind-driven instruments, known as Aeolian harps[1], have been found throughout history in China, Ethiopia, Greece, Indonesia, India, and Melanesia[1]. Music generated by the wind exists to this day in the form of wind chimes, which first appeared in Ancient Rome and later, in the 2nd century CE, in India and China [3]. Their Japanese counterpart, the fūrin, has been in use since the Edo Period (1603-1867) [4]. The Japanese also had the Suikinkutsu [5], a water instrument that likewise originated in the middle of the Edo Period.

The transition from automatic to algorithmic music commenced in the late 18th century with the creation of the Musikalisches Würfelspiel [6], a game that used dice to randomly generate music from pre-composed options. The game can thus be viewed as a table in which each roll of the dice corresponds to a particular pre-composed segment of music. It is analogous to very basic sentence construction: randomly picking the components of a sentence, one at a time, from a series of available options. This type of algorithmic construction persisted until the formalization of Markov Chains in the early 20th century.
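The table view of the dice game can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the use of two six-sided dice (totals 2 to 12), the number of bars, and the placeholder segment labels are all hypothetical and are not taken from any particular edition of the game.

```python
import random

def build_table(num_bars):
    """Build a hypothetical lookup table: one row per bar, one entry per dice total.

    The labels stand in for pre-composed measures; they are placeholders.
    """
    return {
        bar: {total: f"bar{bar}_option{total}" for total in range(2, 13)}
        for bar in range(1, num_bars + 1)
    }

def roll_two_dice(rng):
    """Roll two six-sided dice and return their sum (assumed game rule)."""
    return rng.randint(1, 6) + rng.randint(1, 6)

def compose(table, rng):
    """Pick one pre-composed segment per bar according to a dice roll."""
    return [table[bar][roll_two_dice(rng)] for bar in sorted(table)]

table = build_table(num_bars=4)
piece = compose(table, random.Random(0))
print(piece)
```

Every choice here is independent of the previous one, which is the limitation that Markov Chains later addressed.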

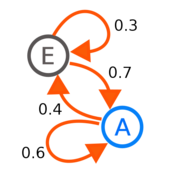

Markov Models

The formalization of the Markov Model [7] shifted the landscape of algorithmic music. With the help of Markov Models, the dice game was extended one step further. Markov Models are built on Markov Chains [8], statistical models of systems that move between states, with the probability of each possible “next” state depending solely on the current state of the system. Just as the Musikalisches Würfelspiel could be represented via a table, a Markov Chain is encoded via a transition matrix, which contains the probabilities of transitioning between any two possible states within the system. Since Markov Models encode variation in probabilities, they can be trained on existing data (that is, the transition matrices can be calculated and adjusted) rather than requiring explicitly composed fragments [2].
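As a minimal sketch of what “training” a transition matrix could look like, the Python below counts how often each note follows another in a toy melody, normalizes the counts into probabilities, and then samples a new melody one note at a time. The note names, the training melody, and the function names are illustrative assumptions rather than part of any cited system, and the matrix is stored as a nested dictionary for readability.

```python
import random
from collections import Counter, defaultdict

def train_transitions(notes):
    """Estimate first-order transition probabilities from a note sequence."""
    counts = defaultdict(Counter)
    for current, nxt in zip(notes, notes[1:]):
        counts[current][nxt] += 1
    # Normalize each row of counts so that its probabilities sum to 1.
    return {
        state: {nxt: n / sum(row.values()) for nxt, n in row.items()}
        for state, row in counts.items()
    }

def generate(transitions, start, length, rng):
    """Sample a melody; each note depends only on the note before it."""
    melody = [start]
    while len(melody) < length:
        row = transitions.get(melody[-1])
        if not row:  # no observed successor for this state: stop early
            break
        states = list(row)
        weights = [row[s] for s in states]
        melody.append(rng.choices(states, weights=weights, k=1)[0])
    return melody

# Hypothetical training data: a short melody written as note names.
training_melody = ["C", "D", "E", "C", "D", "G", "E", "C"]
transitions = train_transitions(training_melody)
melody = generate(transitions, start="C", length=8, rng=random.Random(0))
print(transitions["D"])
print(melody)
```

In this toy data, D is followed once by E and once by G, so the learned row for D assigns each a probability of 0.5. Unlike the dice game, no fragments are composed by hand: the probabilities come entirely from the training sequence.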

Recurrent Neural Network Cells (RNNs)

Long Short-Term Memory Cells (LSTMs)

Ongoing Projects – MuseNet

References

- [1] Britannica, T. Editors of Encyclopaedia (2019, October 11). Aeolian harp. Encyclopedia Britannica. https://www.britannica.com/art/Aeolian-harp

- [2] McDonald, K. (2018, November 13). Neural nets for generating music. Medium. Retrieved November 17, 2022, from https://medium.com/artists-and-machine-intelligence/neural-nets-for-generating-music-f46dffac21c0