Smartphone Facial Recognition

By Kenneth Wu

Note: This page is incomplete.

Facial recognition systems are computer programs that match faces against a database. Although the task is trivial for humans, computers have only recently achieved high levels of accuracy.[1] Deep learning through the use of convolutional neural networks currently dominates the facial recognition field.[2] However, deep learning uses much more memory, disk storage, and computational resources than traditional computer vision, which poses significant challenges for facial recognition on the limited hardware of smartphones.[3] Accordingly, smartphone manufacturers have adopted processors with dedicated neural engines for deep learning tasks[4] and have created simpler, more compact models that mimic the behavior of more complex ones.[3]

Model

Facial recognition systems accomplish their tasks by detecting the presence of a face, analyzing its features, and confirming the identity of the person.[5] Training data is fed into a face detection algorithm; the two most popular are the Viola-Jones algorithm and convolutional neural networks.[6]

Viola-Jones Algorithm

The Viola-Jones algorithm was the first real-time object detection framework. It works by converting images to grayscale and looking for edges that signify the presence of human facial features.[7] While it is highly accurate at detecting well-lit, front-facing faces and requires relatively little memory, it is slower than deep-learning-based methods, including the now industry-standard convolutional neural networks (CNNs).[6]
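The edge search described above can be illustrated with a minimal sketch, assuming the integral image and two-rectangle Haar-like feature from Viola and Jones's paper.[7] The 4 x 4 patch and all function names are hypothetical, and the boosted cascade that the full algorithm uses to combine many such features is not shown.

```python
def integral_image(img):
    """Summed-area table with an extra zero row and column.

    ii[y][x] holds the sum of all pixels above and to the left of (y, x).
    """
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle using only four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def vertical_edge_feature(ii, top, left, height, width):
    """Two-rectangle Haar-like feature: right half minus left half."""
    half = width // 2
    left_sum = rect_sum(ii, top, left, height, half)
    right_sum = rect_sum(ii, top, left + half, height, half)
    return right_sum - left_sum


# Hypothetical grayscale patch: dark on the left, bright on the right.
patch = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
ii = integral_image(patch)
edge_response = vertical_edge_feature(ii, 0, 0, 4, 4)
print(edge_response)  # a large value signals a strong vertical edge
```

Because every rectangle sum costs four lookups regardless of its size, features like this stay cheap to evaluate at many positions and scales.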

Convolutional Neural Networks

Convolutional neural networks are closely related to artificial neural networks (ANNs). Unlike traditional ANNs, the neurons in a CNN layer are arranged in three dimensions (width, height, and depth) and connect only to a subset of the preceding layer.[8] Their architecture is sparse, topographic, and feed-forward,[9] featuring an input layer and an output layer along with three types of hidden layers.[10]

The first hidden layer type is convolutional. A filter of size n x n, whose values are learned during training, sweeps across a larger matrix at a pre-determined stride, and the dot product at each position is written to a feature map.[8] This presents a significant advantage over ANNs by greatly reducing the amount of information stored.[8] Because convolution is a linear operation, another step is needed to introduce non-linearity; the most popular is the Rectified Linear Unit (ReLU), which replaces negative values with zero.[10]

The next hidden layer type is pooling, which further reduces the size of the data and the computational power required.[11] The most common method, max pooling, sweeps a window (usually 2 x 2 with a stride of 2) over the activation map and passes the largest value in each window to the next layer.[11] The final hidden layer type is fully connected: its neurons connect to every neuron in the two adjacent layers, as in an ANN.[8] CNNs are usually configured in one of two ways: the first stacks convolutional layers and then passes their output to pooling layers; the second places two convolutional layers before each pooling layer.[8]
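The convolution, ReLU, and max pooling steps can be sketched in plain Python. This is a minimal illustration rather than a production implementation: the 5 x 5 image and the 2 x 2 filter values are made up for the example, whereas in a real CNN the filter values come from training.

```python
def convolve2d(image, kernel, stride=1):
    """Slide the kernel over the image (no padding) and record each dot product."""
    k = len(kernel)
    out = []
    for top in range(0, len(image) - k + 1, stride):
        row = []
        for left in range(0, len(image[0]) - k + 1, stride):
            total = 0
            for i in range(k):
                for j in range(k):
                    total += image[top + i][left + j] * kernel[i][j]
            row.append(total)
        out.append(row)
    return out


def relu(feature_map):
    """Replace every negative value with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2, stride=2):
    """Keep only the largest value inside each size x size window."""
    out = []
    for top in range(0, len(feature_map) - size + 1, stride):
        row = []
        for left in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[top + i][left + j]
                      for i in range(size) for j in range(size)]
            row.append(max(window))
        out.append(row)
    return out


# Hypothetical input and filter values, chosen only for illustration.
image = [
    [1, 2, 0, 1, 3],
    [0, 1, 2, 3, 1],
    [3, 0, 1, 2, 0],
    [1, 2, 3, 0, 1],
    [0, 1, 0, 2, 3],
]
kernel = [[1, -1], [-1, 1]]

feature_map = convolve2d(image, kernel)  # 5 x 5 input -> 4 x 4 feature map
activated = relu(feature_map)            # negatives become zero
pooled = max_pool(activated)             # 4 x 4 -> 2 x 2
print(pooled)  # [[3, 0], [4, 5]]
```

The 25 input values shrink to 4 after one convolution and one pooling step, which is the size reduction that pooling is meant to provide.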

Deep CNNs are the go-to method for supervised training and are even capable of unsupervised classification given a large enough training data set.[12] Training results in learned weights, which are data patterns or rules extracted from the provided images.[13] The trained filter values help determine the visual features of an input image, which the system can then compare against its existing database for a match.[13] Once trained, a model can be retrained to recognize faces that were not in the original training set, a process known as transfer learning.[14] In this process, the weights for feature extraction (finding the features in an image) are retained, while the weights for classification are changed.[14] In this way, smartphones can learn new faces after they have already been trained.
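The split between retained and retrained weights can be shown with a toy sketch. Everything here is an assumption made for illustration: a fixed 2 x 2 matrix stands in for the pretrained convolutional layers, a small logistic classifier stands in for the classification layers, and the four two-value "images" are invented data.

```python
import math

# Stand-in for the pretrained feature-extraction layers. These stay frozen.
FROZEN_FEATURE_WEIGHTS = [[0.5, -0.5], [0.25, 0.75]]


def extract_features(pixels):
    """Frozen feature extractor: a fixed linear map followed by ReLU."""
    return [max(0.0, sum(w * p for w, p in zip(row, pixels)))
            for row in FROZEN_FEATURE_WEIGHTS]


def predict(head, features):
    """Logistic classification head; only these weights are retrained."""
    z = head["bias"] + sum(w * f for w, f in zip(head["weights"], features))
    return 1.0 / (1.0 + math.exp(-z))


def retrain_head(samples, labels, epochs=500, lr=0.1):
    """Fit a fresh classification head with stochastic gradient descent."""
    head = {"weights": [0.0, 0.0], "bias": 0.0}
    for _ in range(epochs):
        for pixels, label in zip(samples, labels):
            features = extract_features(pixels)  # frozen weights are only read
            error = predict(head, features) - label
            head["weights"] = [w - lr * error * f
                               for w, f in zip(head["weights"], features)]
            head["bias"] -= lr * error
    return head


# Hypothetical new faces: label 1 is the phone's owner, label 0 is anyone else.
samples = [[4, 0], [3, 1], [0, 4], [1, 3]]
labels = [1, 1, 0, 0]
head = retrain_head(samples, labels)

for pixels, label in zip(samples, labels):
    print(label, round(predict(head, extract_features(pixels)), 3))
```

Only the three numbers in `head` change during retraining, which is why learning a new face is far cheaper than training the whole network again.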

Optimizations for Mobile Devices

Several advances have made it possible for smartphones to perform facial recognition locally, without relying on the cloud as has traditionally been done for tasks involving neural networks.[3] Options for improving the performance of on-device neural networks exist in both software and hardware.

Software

Software methods typically trade a small amount of accuracy for large performance boosts.

Weight Pruning

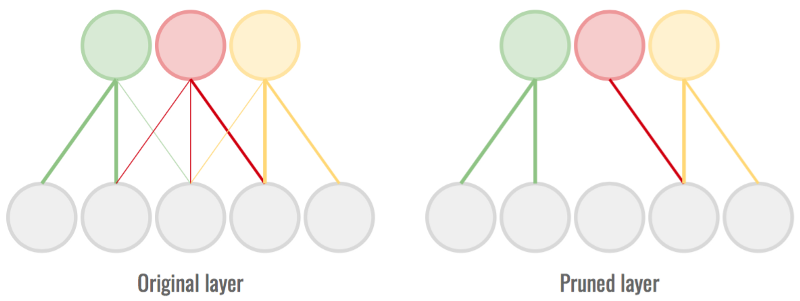

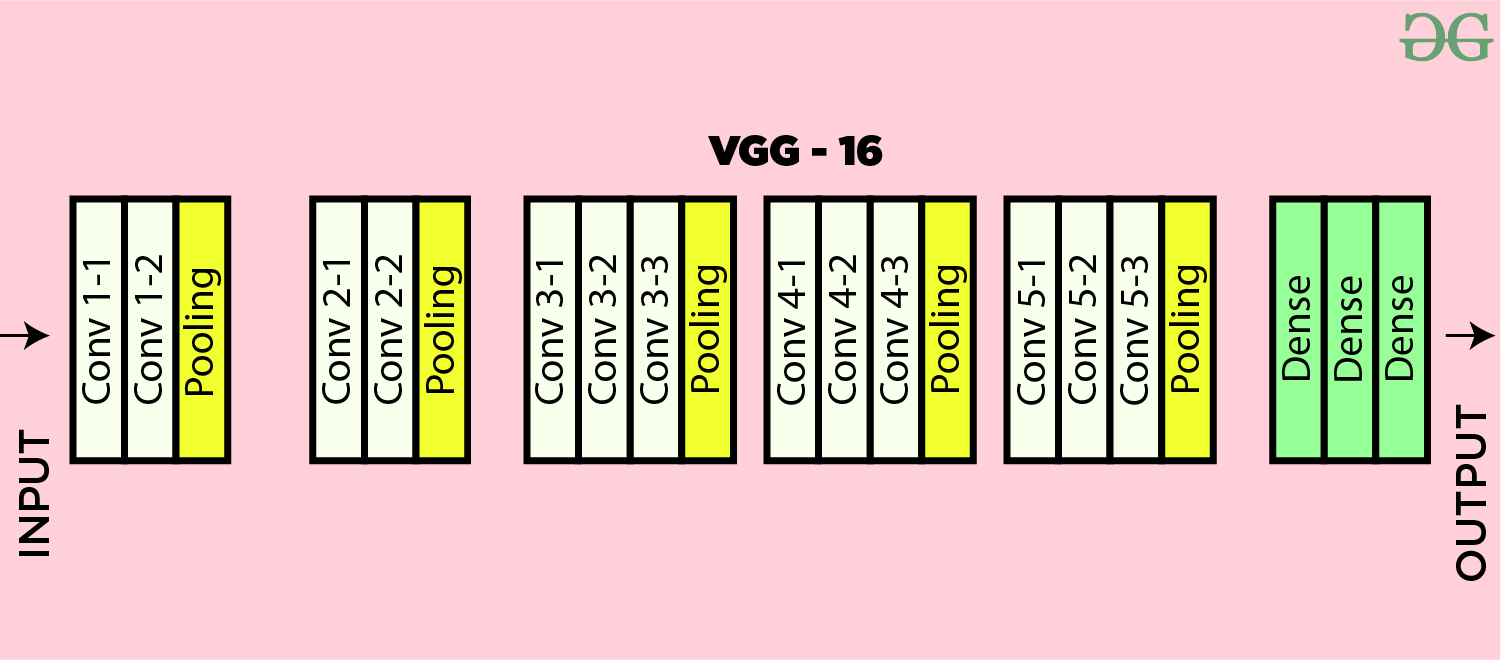

A trained neural network contains weights that have minimal impact on the activation of a neuron but still consume computational power.[15] Pruning removes the connections with the lowest weights, which speeds up calculation with minimal impact on accuracy.[15] One such pruning technique has been shown experimentally to allow real-time execution on a smartphone of a large deep neural network (VGG-16) on a large-scale data set (ImageNet).[15]
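As a rough illustration, the sketch below applies simple magnitude-based pruning to one neuron. This is an assumption chosen for brevity and is not the pattern-based technique of the cited paper;[15] the weights, inputs, and the 50% pruning fraction are hypothetical.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    n_prune = int(len(weights) * fraction)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


def neuron_output(weights, inputs):
    """Weighted sum that skips pruned connections, modeling the saved work."""
    return sum(w * x for w, x in zip(weights, inputs) if w != 0.0)


# Hypothetical weights and inputs for a single neuron.
weights = [0.8, -0.01, 0.02, -0.6, 0.005, 0.4]
inputs = [1.0, 2.0, 3.0, 1.0, 4.0, 0.5]

pruned = prune_by_magnitude(weights, 0.5)
dense_out = neuron_output(weights, inputs)
sparse_out = neuron_output(pruned, inputs)

print(pruned)                 # [0.8, 0.0, 0.0, -0.6, 0.0, 0.4]
print(dense_out, sparse_out)  # the two outputs stay close together
```

Half of the multiplications disappear while the output moves only slightly, which is the trade-off pruning relies on.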

Weight Quantizing

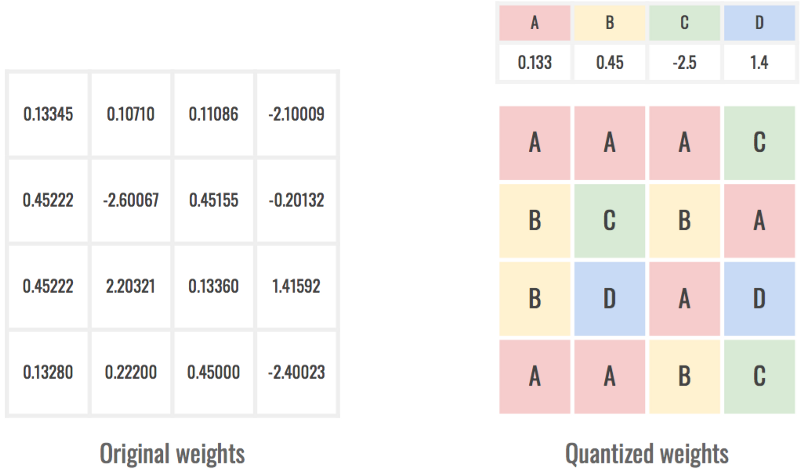

Network weights are typically stored on disk as floating-point values, which in C take up four bytes, or thirty-two bits, each.[16] Quantizing weights changes their representation from floating-point numbers to a small, fixed set of values.[16] Each weight becomes a reference to the closest quantized value, and that reference can take up as little as a single byte, or eight bits.[16] Increasing the number of quantized values increases accuracy at the expense of memory and power usage.[16] Researchers have been able to train neural networks with extremely low-precision weights and activations, each taking as little as one or two bits compared to the usual thirty-two.[17] Such training lowers power consumption by an order of magnitude, and networks with weights and activations wider than one bit perform comparably to their thirty-two-bit counterparts.[17]
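The idea of replacing each weight with a reference to its closest quantized value can be sketched as follows. The evenly spaced codebook, the five example weights, and the function names are assumptions for illustration; real schemes choose their quantized values in different ways.

```python
import struct


def build_codebook(weights, levels):
    """Evenly spaced quantized values spanning the range of the weights."""
    lo, hi = min(weights), max(weights)
    step = (hi - lo) / (levels - 1)
    return [lo + i * step for i in range(levels)]


def quantize(weights, codebook):
    """Replace each weight with the index of its closest codebook value."""
    return [min(range(len(codebook)), key=lambda i: abs(codebook[i] - w))
            for w in weights]


def dequantize(indices, codebook):
    """Look each index up again to recover approximate weights."""
    return [codebook[i] for i in indices]


# Hypothetical trained weights.
weights = [-1.0, -0.4, 0.1, 0.35, 1.0]
codebook = build_codebook(weights, levels=5)
indices = quantize(weights, codebook)
restored = dequantize(indices, codebook)

# Storage per weight: a 32-bit float versus a one-byte index.
float_bytes = len(struct.pack(f"{len(weights)}f", *weights))
index_bytes = len(bytes(indices))

print(indices)                   # [0, 1, 2, 3, 4]
print(restored)                  # [-1.0, -0.5, 0.0, 0.5, 1.0]
print(float_bytes, index_bytes)  # 20 5
```

A one-byte index can address up to 256 quantized values. The codebook itself must also be stored, but it is shared by every weight, so its cost is negligible for a large network.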

Hardware

Since at least 2018, mobile chipmakers have focused on building neural network accelerators into their chips, from the Neural Engine in the iPhone's Bionic chip to Huawei's Neural Processing Unit.[18] The Kirin 970 aboard Huawei's P20 was the first to incorporate a separate neural processing unit for deep learning tasks rather than rely on the CPU or GPU.[19] It was followed by Samsung's Exynos 9810 chip[4] and Apple's A11 chip, which dedicates two separate cores to neural computation and uses them for FaceID.[20] Notably, hardware acceleration for deep learning tasks on modern chips includes support for quantization down to one bit.[19] Additionally, mobile chipmaker Qualcomm has filed a patent for a method to jointly prune and quantize a deep neural network based on the operational abilities of a device.[21] Raw performance increases in mobile chips have fueled the surge of neural networks in smartphones: the Neural Engine of Apple's A16 Bionic chip is capable of 17 trillion operations per second, almost 30 times the 600 billion operations per second of the original, introduced five years prior.[22]

Implementations

Apple / FaceID

Apple's FaceID facial recognition works by combining cameras with neural networks powered by its Bionic neural engine.[23] Using an infrared camera and a dot projector that puts 30,000 points on a person's face, the hardware detects depth, which prevents the phone from being unlocked with a picture.[23] Apple has revealed that it uses a deep convolutional neural network for face detection,[3] but it has not revealed the model it uses for facial recognition. Initial setup involves the user looking at the phone and rotating their head.[24] The speed of setup indicates that much of the neural network is pretrained.[24] While speculative, one recreation of FaceID used a Siamese neural network trained to maximize the distances between faces of different people.[24] Siamese neural networks use twin neural networks that share all weights and learn to compute distances between inputs, such as images, by mapping them onto a low-dimensional feature space.[24] The network is then trained so that points (the extracted facial features) from different classes are as far apart as possible.[24] Thus, camera input can be compared to the reference image, which can be updated as the user's appearance changes due to facial hair or makeup, creating a fully functioning neural net that becomes increasingly better at distinguishing between the user's face and others'.[24]
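The distance comparison at the heart of a Siamese network can be sketched as below. This is not Apple's method, which is unpublished. The shared weight matrix, the three-value "faces", the 0.5 threshold, and the use of a contrastive loss with a margin of 1.0 are all assumptions made for illustration.

```python
import math

# Hypothetical shared weights: both twin networks use this same matrix.
SHARED_WEIGHTS = [[0.6, -0.2, 0.1], [0.1, 0.5, -0.3]]


def embed(face):
    """Map an input onto a low-dimensional feature space (here, 2 values)."""
    return [sum(w * x for w, x in zip(row, face)) for row in SHARED_WEIGHTS]


def distance(face_a, face_b):
    """Euclidean distance between the two embeddings."""
    return math.dist(embed(face_a), embed(face_b))


def contrastive_loss(d, same_person, margin=1.0):
    """Pull matching pairs together; push other pairs at least `margin` apart."""
    if same_person:
        return d ** 2
    return max(0.0, margin - d) ** 2


def is_match(reference, candidate, threshold=0.5):
    """Accept the candidate when its embedding is close to the reference."""
    return distance(reference, candidate) < threshold


# Hypothetical inputs standing in for face images.
reference = [1.0, 0.5, 0.2]
same_user = [1.1, 0.5, 0.1]
stranger = [-1.0, 2.0, 1.5]

print(is_match(reference, same_user))  # True
print(is_match(reference, stranger))   # False
```

During training, the loss is zero for a pair of different people whose embeddings are already farther apart than the margin, so the network only spends effort on pairs it still confuses.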

Samsung / Intelligent Scan

On most of its phones, Samsung uses a traditional camera setup to take a picture of the face and analyze its features.[25] Because this feature has been available since 2011, before the convolutional neural network revolution in facial recognition, it is unlikely that Samsung currently uses deep learning for its facial recognition software.[25] Because these phones have no way to create a 3D map, they can be unlocked with a photograph of the face and may fail to recognize the user after a significant change in appearance. For two phones, the Galaxy S8 and the Galaxy S9, Samsung used iris scanning with an infrared camera.[25] In the Galaxy S9, iris scanning was combined with traditional facial recognition as "Intelligent Scan," but this could also be fooled with a picture and a contact lens.[25] The feature was discontinued in later generations because the infrared camera was removed, and it is not clear what processes powered these systems or whether neural networks were leveraged.[25] Samsung's processors, however, are designed to support convolutional neural networks.[26]

Gallery

A demonstration of the convolutional process.

A demonstration of the pooling layer.

A visual of how pruning works.

A visual of how quantizing works.

A diagram of the VGG-16 architecture, a popular CNN model for facial recognition.

References

- [1] Brownlee, J. (2019, July 5). A gentle introduction to deep learning for face recognition. Machine Learning Mastery. Retrieved November 14, 2022, from https://machinelearningmastery.com/introduction-to-deep-learning-for-face-recognition/

- [2] Almabdy, S., & Elrefaei, L. (2019). Deep convolutional neural network-based approaches for face recognition. Applied Sciences, 9(20), 4397. https://doi.org/10.3390/app9204397

- [3] Computer Vision Machine Learning Team. (2017, November). An on-device deep neural network for face detection. Apple Machine Learning Research. Retrieved November 14, 2022, from https://machinelearning.apple.com/research/face-detection#1

- [4] Samsung. (2018). Exynos 9810: Mobile Processor. Samsung Semiconductor Global. Retrieved November 14, 2022, from https://semiconductor.samsung.com/processor/mobile-processor/exynos-9-series-9810/

- [5] Klosowski, T. (2020, July 15). Facial recognition is everywhere. Here's what we can do about it. The New York Times. Retrieved November 14, 2022, from https://www.nytimes.com/wirecutter/blog/how-facial-recognition-works/

- [6] Enriquez, K. (2018, May 15). Faster face detection using Convolutional Neural Networks & the Viola-Jones algorithm (thesis). California State University Stanislaus. Retrieved November 14, 2022, from https://www.csustan.edu/sites/default/files/groups/University%20Honors%20Program/Journals/01_enriquez.pdf.

- [7] Viola, P., & Jones, M. (2001). Rapid object detection using a boosted cascade of simple features. Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001. https://doi.org/10.1109/cvpr.2001.990517

- [8] O'Shea, K., & Nash, R. (2015). An introduction to convolutional neural networks. arXiv preprint arXiv:1511.08458.

- [9] Gurucharan, M. (2022, July 28). Basic CNN architecture: Explaining 5 layers of Convolutional Neural Network. upGrad. Retrieved November 14, 2022, from https://www.upgrad.com/blog/basic-cnn-architecture/#:~:text=other%20advanced%20tasks.-,What%20is%20the%20architecture%20of%20CNN%3F,the%20main%20responsibility%20for%20computation.

- [10] Mishra, M. (2020, August 26). Convolutional neural networks, explained. Towards Data Science. Retrieved November 14, 2022, from https://towardsdatascience.com/convolutional-neural-networks-explained-9cc5188c4939

- [11] Saha, S. (2018, December 15). A comprehensive guide to convolutional neural network. Towards Data Science. Retrieved November 14, 2022, from https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53

- [12] Guérin, J., Gibaru, O., Thiery, S., & Nyiri, E. (2018). CNN features are also great at unsupervised classification. Computer Science & Information Technology. https://doi.org/10.5121/csit.2018.80308

- [13] Khandelwal, R. (2020, May 18). Convolutional Neural Network: Feature map and filter visualization. Towards Data Science. Retrieved November 14, 2022, from https://towardsdatascience.com/convolutional-neural-network-feature-map-and-filter-visualization-f75012a5a49c

- [14] Tammina, S. (2019). Transfer learning using VGG-16 with deep convolutional neural network for classifying images. International Journal of Scientific and Research Publications (IJSRP), 9(10), 143–150. https://doi.org/10.29322/ijsrp.9.10.2019.p9420

- [15] Ma, X., Guo, F. M., Niu, W., Lin, X., Tang, J., Ma, K., ... & Wang, Y. (2020, April). PConv: The missing but desirable sparsity in DNN weight pruning for real-time execution on mobile devices. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 34, No. 04, pp. 5117-5124).

- [16] Despois, J. (2018, April 24). How smartphones handle huge Neural Networks. Heartbeat. Retrieved November 15, 2022, from https://heartbeat.comet.ml/how-smartphones-manage-to-handle-huge-neural-networks-269debcb243d

- [17] Hubara, I., Courbariaux, M., Soudry, D., El-Yaniv, R., & Bengio, Y. (2017). Quantized neural networks: Training neural networks with low precision weights and activations. The Journal of Machine Learning Research, 18(1), 6869-6898.

- [18] Neuromation. (2018, November 30). What's the deal with "AI chips" in the latest smartphones? Medium. Retrieved November 15, 2022, from https://medium.com/neuromation-blog/whats-the-deal-with-ai-chips-in-the-latest-smartphones-28eb16dc9f45

- [19] Ignatov, A., Timofte, R., Chou, W., Wang, K., Wu, M., Hartley, T., & Van Gool, L. (2018). AI Benchmark: Running deep neural networks on Android smartphones. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops.

- [20] Apple. (2018). iPhone XS Specs. Apple. Retrieved November 15, 2022, from https://web.archive.org/web/20190104041336/https://www.apple.com/iphone-xs/specs/

- [21] Qualcomm. (2021, March 25). Joint pruning and quantization scheme for deep neural networks patent application. USPTO.report. Retrieved November 15, 2022, from https://uspto.report/patent/app/20210089922

- [22] Hristov, V. (2022, September 17). A16 Bionic explained: What's new in Apple's pro-grade Mobile chip? Phone Arena. Retrieved November 15, 2022, from https://www.phonearena.com/news/A16-Bionic-explained-whats-new_id142438

- [23] Tillman, M. (2022, March 4). What is Apple Face ID and how does it work? Pocket-Lint. Retrieved November 15, 2022, from https://www.pocket-lint.com/phones/news/apple/142207-what-is-apple-face-id-and-how-does-it-work

- [24] Di Palo, N. (2018, March 12). How I Implemented iPhone X's FaceID Using Deep Learning in Python. Towards Data Science. Retrieved November 15, 2022, from https://towardsdatascience.com/how-i-implemented-iphone-xs-faceid-using-deep-learning-in-python-d5dbaa128e1d

- [25] Zdziarski, Z. (2020, December 24). Apple's and Samsung's face unlocking technologies. Zbigatron. Retrieved November 15, 2022, from https://zbigatron.com/apples-and-samsungs-face-unlocking-technologies/

- [26] Samsung. (n.d.). On-device AI: Mobile AI. Samsung Semiconductor Global. Retrieved November 15, 2022, from https://semiconductor.samsung.com/insights/topic/ai/on-device-ai/