Difference between revisions of "24F Final Project: Neuromorphic Computing"


Revision as of 07:26, 22 October 2022

By Chris Mecane

Neuromorphic computing, also known as neuromorphic engineering, is a fairly recent development in the realm of computational neuroscience. The goal of neuromorphic computing is to construct computers whose hardware and software mimic the anatomy and physiology of the human brain via replication of neural structure and emulation of synaptic communication.[1] Though still in its nascent years, the field is a source of hope for the future of computing and artificial intelligence.

Background

Though neuromorphic computing remains largely a promising concept, the foundations of the field are generally credited to Caltech's Carver Mead in the late 1980s.[2] Using human neurobiology as the model, Mead contrasted the brain's hierarchical encoding of information, such as its ability to instantly integrate a sensory perception as an event with an associated emotion, with a computer's inability to encode in this way; he further noted the brain's distinctive capacity to combine signal processing with gain control. Mead nonetheless recognized the need to better understand the brain before an accurately designed neuromorphic computer could be realized; he emphasized that it is barely understood how the brain, for a given amount of energy expended, is able to perform many times more computations than even the most advanced computer.[3] Mead himself, along with his PhD student Misha Mahowald, provided the first practical example of the potential of neuromorphic computing through their development of a silicon retina in 1991, which successfully imitated the output signals of true human retinas, most notably in response to moving images.[4]

How Neuromorphic Computing Works

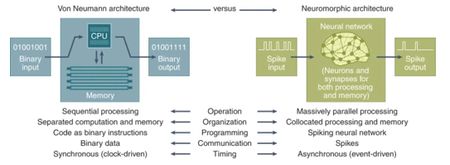

Neuromorphic computers are defined in part by how they differ from the widespread von Neumann computers, which have distinct CPUs and memory units and store data as binary. In contrast, neuromorphic computers, in their efforts to process information analogously to the human brain, integrate memory and processing into a single mechanism regulated by their neurons and synapses[5]; neurons receive "spikes" of information, with the timing, magnitude, and shape of each spike all being meaningful attributes in the encoding of numerical information. As such, neuromorphic computers are said to be modeled using spiking neural networks (SNNs); spiking neurons behave similarly to biological neurons, in that they factor in characteristics such as threshold values for neuronal activation and synaptic weights, which can change over time.[1] These features can all be found in existing, standard neural networks that are capable of continual learning, such as perceptrons. Neuromorphic computers, however, would surpass these traditional networks in their ability to incorporate neuronal and synaptic delay; as information flows in, "charge" accumulates in the neurons until it surpasses some charge threshold and produces a "spike," or action potential. If the charge does not exceed the threshold over some given time period, it "leaks."[1] Unlike traditional computers, the neuronal and synaptic makeup of neuromorphic computers additionally allows many parallel operations to run in different neurons at a given time, whereas von Neumann computers process information sequentially. Through their parallel processing, along with their integration of processing and memory, neuromorphic computers provide a glimpse into a future of vastly more energy-efficient computation.[5]
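The accumulate, leak, and spike behavior described above can be illustrated with a minimal leaky integrate-and-fire sketch. This is not taken from any of the cited systems: the class name `LIFNeuron`, the helper `run`, and the threshold, leak, and weight values are all assumptions chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron (hypothetical names and values)."""
    threshold: float = 1.0  # charge level that triggers a spike (assumed)
    leak: float = 0.9       # fraction of charge retained per time step (assumed)
    charge: float = 0.0     # accumulated "charge"

    def step(self, weighted_input: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        # Leak part of the stored charge, then integrate the new input.
        self.charge = self.charge * self.leak + weighted_input
        if self.charge >= self.threshold:
            self.charge = 0.0  # reset after the spike
            return True
        return False


def run(neuron, inputs, weight):
    """Feed a sequence of input spikes (0 or 1) through one weighted synapse."""
    return [neuron.step(weight * x) for x in inputs]


if __name__ == "__main__":
    # Closely spaced inputs accumulate enough charge to cross the threshold.
    print(run(LIFNeuron(), [1, 1, 1, 0, 0, 1, 1, 1], weight=0.4))
    # Sparse inputs leak away between arrivals and never produce a spike.
    print(run(LIFNeuron(), [1, 0, 0, 0, 1, 0, 0, 0], weight=0.4))
```

In this sketch the timing of the inputs, and not only their number, determines whether a spike occurs: three inputs arriving back to back cross the threshold, while the same synaptic weight delivered sparsely does not.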

Hardware and Present Applications

While an incomplete understanding of the brain's intricacies has restricted the development of full-fledged neuromorphic computers, the past few decades have seen great strides in neuromorphic hardware. Most of this hardware, as may be expected, is built from silicon using complementary metal oxide semiconductor (CMOS) technology. A remarkable breakthrough has been the University of Manchester's SpiNNaker neuromorphic supercomputer, whose eventual goal is to simulate up to one billion neurons at once. The half-million silicon central processing units (CPUs) in SpiNNaker were developed in 2011, and communicate via a packet-switched network that mimics the dense neuronal connectivity of the human brain. The computer additionally contains a router that allows units of information to be sent to more than one location. Since the hardware itself brokers all packet transmission, the computer is able to achieve a bandwidth of up to five billion packets per second; in combination with its fifty-seven thousand nodes arranged in hexagonal arrays and various methods of communication between cores, SpiNNaker is able to achieve ultra-high levels of parallel processing while consuming about 90 kW of electrical power: several thousand times the roughly 20 W consumed by the human brain.[6] Current SpiNNaker research focuses on finding more efficient hardware for simulating neuronal spiking as it exists in the human brain.[7]

Another tangible example of advancement in neuromorphic computing, BrainScaleS, provides a model for a state-of-the-art, large-scale analog spiking neural network. Utilizing programmable plasticity units (PPUs), a type of custom CPU, BrainScaleS simulates synaptic plasticity at one thousand times the speed observed in the human brain. The system comprises twenty silicon wafers, each with fifty million plastic synapses and two hundred thousand realistic neurons, and evolves its code based on the physical properties of the hardware. The high speed relative to that of the human brain, which stems from the circuits being physically shorter than those observed in the brain, requires much less power input than other simulated neuronal networks.
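As a rough check on the scale of the figures quoted above, the short sketch below works through the arithmetic. The constants restate numbers given in the text, except `BRAIN_POWER_W`, which assumes the roughly 20 W brain estimate from the cited NIST article; all names are hypothetical.

```python
# Figures restated from the text (names are illustrative only).
SPINNAKER_POWER_W = 90_000        # about 90 kW
BRAIN_POWER_W = 20                # assumed: ~20 W, per the cited NIST article
WAFERS = 20                       # BrainScaleS wafer count
SYNAPSES_PER_WAFER = 50_000_000
NEURONS_PER_WAFER = 200_000
SPEEDUP = 1_000                   # BrainScaleS speed relative to biology


def power_ratio() -> float:
    """SpiNNaker power draw as a multiple of the brain's."""
    return SPINNAKER_POWER_W / BRAIN_POWER_W


def brainscales_totals() -> tuple[int, int]:
    """Total (synapses, neurons) across all wafers."""
    return WAFERS * SYNAPSES_PER_WAFER, WAFERS * NEURONS_PER_WAFER


def hardware_seconds(biological_seconds: float) -> float:
    """Wall-clock time needed to emulate a span of biological time."""
    return biological_seconds / SPEEDUP


if __name__ == "__main__":
    print(f"SpiNNaker / brain power: {power_ratio():,.0f}x")
    synapses, neurons = brainscales_totals()
    print(f"BrainScaleS totals: {synapses:,} synapses, {neurons:,} neurons")
    print(f"One biological day takes {hardware_seconds(86_400):.1f} s of hardware time")
```

Under these assumptions, the full BrainScaleS system holds one billion synapses and four million neurons, and a day of biological activity is emulated in under a minute and a half.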

Limitations and Future Research

References

- [1] https://www.ibm.com/think/topics/neuromorphic-computing

- [2] https://iopscience.iop.org/article/10.1088/1741-2560/13/5/051001/meta

- [3] https://spie.org/news/photonics-focus/septoct-2024/inventing-the-integrated-circuit#_=_

- [4] https://tilde.ini.uzh.ch/~tobi/wiki/lib/exe/fetch.php?media=mahowaldmeadsiliconretinasciam1991color.pdf

- [5] https://www.nature.com/articles/s43588-021-00184-y

- [6] https://www.nist.gov/blogs/taking-measure/brain-inspired-computing-can-help-us-create-faster-more-energy-efficient#:~:text=The%20human%20brain%20is%20an,just%2020%20watts%20of%20power.

- [7] https://apt.cs.manchester.ac.uk/projects/SpiNNaker/project/