24F Final Project: Overfitting

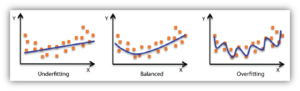

Revision as of 07:27, 22 October 2022

By Thomas Zhang

Overfitting is a phenomenon in machine learning that occurs when a learning algorithm fits its training data too closely (or even exactly), producing a model that cannot make accurate predictions on new data.[1] More generally, it means the model has learned the training data too well, including “noise” (irrelevant information) and random fluctuations, so its performance drops when it is presented with new data. This is a serious problem because many real-world applications depend on the ability of machine learning models to make predictions or decisions and to classify data; by interfering with a model’s ability to generalize to new data, overfitting directly undermines the classification and prediction tasks the model was built for.[1]
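
The definition above can be made concrete with a small experiment. The sketch below is a hypothetical toy example, not taken from the cited sources: it draws noisy samples from a straight line (the line, noise level, and sample sizes are all assumptions), then compares a 1-nearest-neighbor predictor, used here as a stand-in for a model that has memorized its training set, with an ordinary least-squares line that has only two parameters.

```python
import random


def make_data(n, rng, noise=1.0):
    # Hypothetical toy problem: y = 2x + 1 plus Gaussian noise with std `noise`.
    xs = [rng.uniform(0.0, 10.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


def fit_memorizer(xs, ys):
    # 1-nearest-neighbor "model": store every training pair and answer with the
    # target of the closest stored x, so each training point is reproduced exactly.
    pairs = list(zip(xs, ys))

    def predict(x):
        return min(pairs, key=lambda p: abs(p[0] - x))[1]

    return predict


def fit_line(xs, ys):
    # Ordinary least-squares line: just a slope and an intercept.
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


def mse(model, xs, ys):
    # Mean squared error of `model` on the points (xs, ys).
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)
train_x, train_y = make_data(50, rng)
test_x, test_y = make_data(1000, rng)  # "new data" that neither model saw during fitting

for name, fit in [("memorizer (1-NN)", fit_memorizer), ("straight line", fit_line)]:
    model = fit(train_x, train_y)
    print(f"{name:18s} train MSE = {mse(model, train_x, train_y):.3f}  "
          f"test MSE = {mse(model, test_x, test_y):.3f}")
```

The memorizer’s training error is exactly zero because each training point is its own nearest neighbor, yet it carries the training noise over to every new input, so its test error should come out well above the line’s, which should stay close to the noise variance (1.0 in this setup).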

Background/History

The term “overfitting” originated in statistics, where the phenomenon was studied extensively in the context of regression analysis and pattern recognition; with the arrival of artificial intelligence and machine learning, however, it has received increased attention because of its important implications for the performance of AI models.[2] The concept has evolved significantly since those early days, as researchers continue to develop methods that mitigate overfitting’s adverse effects on a model’s accuracy and its ability to generalize.[2]

Overfitting

Overfitting occurs when a machine learning model captures not only the underlying patterns in the training data but also its random noise or errors. This tends to happen when the model trains for too long on sample data or when the model is too complex. A model trained excessively on a limited dataset tends to memorize the specific data points rather than learn the underlying patterns of the dataset.[2] Complex models with a large number of parameters are also prone to overfitting because they have the capacity to learn intricate details of the training data, including its noise.[2]
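
The role of model complexity can be illustrated with k-nearest-neighbors regression, where the number of neighbors k acts as a complexity knob. This choice of model is our own, not one named in the cited sources: with k = 1 the model reproduces every training point, noise included, while with k equal to the training-set size it ignores x entirely and predicts one overall average. The sine-shaped target, noise level, sample sizes, and values of k below are assumptions made for the sketch.

```python
import math
import random


def make_data(n, rng, noise=0.5):
    # Hypothetical toy data: y = sin(2*pi*x) plus Gaussian noise with std `noise`.
    xs = [rng.uniform(0.0, 1.0) for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


def knn_predict(train_x, train_y, x, k):
    # Average the targets of the k training points closest to x.
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def mse(train_x, train_y, xs, ys, k):
    # Mean squared error of the k-NN model (built on train_x/train_y) on (xs, ys).
    errors = [(knn_predict(train_x, train_y, x, k) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)


rng = random.Random(1)
train_x, train_y = make_data(200, rng)
test_x, test_y = make_data(500, rng)

# Smaller k = more flexible (more complex) model; k = 200 predicts a single global mean.
results = {}
for k in (1, 5, 15, 50, 200):
    train_err = mse(train_x, train_y, train_x, train_y, k)
    test_err = mse(train_x, train_y, test_x, test_y, k)
    results[k] = (train_err, test_err)
    print(f"k={k:3d}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

Training error should generally rise as k grows, since a less flexible model can no longer chase every noisy point, while test error is typically worst at the two extremes: k = 1 fits the noise, and k = 200 is too rigid to follow the curve at all.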

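Training for too long is commonly guarded against by measuring the loss on a held-out validation set after every epoch and halting once it has failed to improve for a set number of epochs, often called the “patience.” The function below is a framework-independent sketch of that rule; the name `early_stopping_epoch`, its `patience` and `min_delta` parameters, and the loss values are illustrative assumptions, not any particular library’s API.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Hypothetical helper (not from any library).

    Given per-epoch validation losses (0-indexed epochs), return
    (stop_epoch, best_epoch): training stops once `patience` consecutive epochs
    fail to beat the best loss so far by more than `min_delta`. If that never
    happens, training runs through the last epoch.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the patience counter.
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical validation-loss curve: it falls while the model learns the signal,
# then rises as the model starts fitting noise in the training set.
val_losses = [1.00, 0.70, 0.55, 0.48, 0.46, 0.47, 0.49, 0.53, 0.58, 0.64]
stop, best = early_stopping_epoch(val_losses, patience=3)
print(f"stopped after epoch {stop}; restore the weights saved at epoch {best}")
# prints "stopped after epoch 7; restore the weights saved at epoch 4"
```

Returning `best_epoch` as well as the stopping point reflects a common design choice: by the time the patience runs out, the model has already spent several epochs drifting toward the noise, so the weights from the best validation epoch are usually the ones worth keeping. Setting `patience` too small carries the opposite risk of halting during a temporary plateau.
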
With this in mind, one might reasonably conclude that ending the training process earlier (known as “early stopping”) or reducing the model’s complexity would prevent overfitting; however, stopping training too early or excluding too many important features can cause the opposite problem: underfitting.[1] An underfit model fails to capture the underlying relationship in the dataset it was trained on.[3] Training for too short a time or with poorly chosen hyperparameters can lead to underfitting, but the most common reason models underfit is that they have too much bias.[3] An underfit model exhibits high bias and low variance, meaning it generates reliably inaccurate predictions; while reliability is desirable, inaccuracy is not.[3] Overfitting, on the other hand, produces models with lower bias but higher variance.[1]

In both cases the model fails to capture the dominant pattern in the training data and, as a result, cannot generalize well to new data. This leads to the concept of the bias-variance tradeoff, which describes the need to balance underfitting against overfitting when devising a model that performs well: one is essentially trading off the bias and variance components of the model’s error so that neither becomes overwhelming.[3] Tuning a model away from one of these problems pushes it closer to the other.

References

- [1] IBM. https://www.ibm.com/topics/overfitting

- [2] Lark Editorial Team. (2023, Dec 26). Overfitting. Lark Suite. https://www.larksuite.com/en_us/topics/ai-glossary/overfitting

- [3] Domino Data Lab. Data Science Dictionary: Underfitting. https://domino.ai/data-science-dictionary/underfitting