24F Final Project: Neuromorphic Computing

== Background ==

Though mainstream neuromorphic computing presently remains little more than a promising concept, it is generally agreed that the foundations of the field were laid by Caltech's Carver Mead in the late 1980s.<ref>Furber, S. (2016). Large-scale neuromorphic computing systems. Journal of Neural Engineering, 13(5), 051001. https://doi.org/10.1088/1741-2560/13/5/051001</ref> Using human neurobiology as his model, Mead contrasted the brain's hierarchical encoding of information, such as its ability to instantly bind the sensory perception of an event to an associated emotion, with computers' inability to encode information this way; he further noted the brain's unique capacity to combine signal processing with [https://www.cell.com/current-biology/fulltext/S0960-9822(23)01442-2 gain control]. Mead nonetheless recognized that the brain must be better understood before an accurately designed neuromorphic computer could be realized; he emphasizes that it is still barely understood how the brain, for a given amount of energy, performs many times more computations than even the most advanced computer.<ref>Carver Mead: Microelectronics, Neuromorphic Computing, and life at the Frontiers of Science and Technology. (n.d.). SPIE, the international society for optics and photonics. https://spie.org/news/photonics-focus/septoct-2024/inventing-the-integrated-circuit</ref> Mead and his PhD student Misha Mahowald provided the first practical demonstration of neuromorphic computing's potential with their [https://redwood.berkeley.edu/wp-content/uploads/2018/08/Mead-chapter15-silicon-retina.pdf silicon retina] in 1991, which successfully imitated the output signals of real human retinas, most notably in response to moving images.<ref>Mahowald, M. A., & Mead, C. (1991). The Silicon Retina. Scientific American, 264(5), 76–83. http://www.jstor.org/stable/24936904</ref>

== How Neuromorphic Computing Works ==

[[File:Snn_vs_vn.jpg|450px|left]]Neuromorphic computers are defined in part by how they differ from the widespread [https://www.sciencedirect.com/topics/computer-science/von-neumann-architecture von Neumann computers], which have separate CPUs and memory units and store data in binary. In contrast, neuromorphic computers, which aim to process information analogously to the human brain, integrate memory and processing into a single mechanism regulated by their neurons and synapses<ref name="nature">Schuman, C.D., Kulkarni, S.R., Parsa, M. et al. Opportunities for neuromorphic computing algorithms and applications. Nat Comput Sci 2, 10–19 (2022). https://doi.org/10.1038/s43588-021-00184-y</ref>; neurons receive "spikes" of information, and the timing, magnitude, and shape of each spike are all meaningful attributes in the encoding of numerical information. For this reason, neuromorphic computers are said to be modeled using spiking neural networks (SNNs). Spiking neurons behave similarly to biological neurons in that they take into account characteristics such as activation thresholds and [https://www.sciencedirect.com/topics/computer-science/synaptic-weight synaptic weights] that can change over time.<ref name="ibm"/> These features can also be found in existing, standard neural networks capable of continual learning, such as [https://www.sciencedirect.com/topics/neuroscience/perceptron perceptrons]. Neuromorphic computers, however, go beyond these traditional networks by incorporating neuronal and synaptic delay: as information flows in, "charge" accumulates in a neuron until it crosses some threshold and produces a "spike," or [https://www.ncbi.nlm.nih.gov/books/NBK538143/ action potential]. If the charge does not reach the threshold within a given period, it "leaks" away.<ref name="ibm"/> Their neuronal and synaptic makeup also allows neuromorphic computers, unlike traditional computers, to run many operations in parallel across different neurons at once, whereas von Neumann computers process information sequentially. Through this parallelism and the integration of processing with memory, neuromorphic computers offer a glimpse of a future of vastly more energy-efficient computation.<ref name="nature"/>
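The accumulate, threshold, and leak behaviour described above can be sketched as a discrete-time leaky integrate-and-fire (LIF) neuron. This is a minimal illustration, not how any particular neuromorphic chip implements it; the class name `LIFNeuron`, the threshold of 1.0, and the per-step retention factor of 0.9 are hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Minimal discrete-time leaky integrate-and-fire neuron (hypothetical parameters)."""

    threshold: float = 1.0  # charge at which the neuron fires
    leak: float = 0.9       # fraction of charge retained per step; the rest "leaks" away
    charge: float = 0.0     # accumulated membrane charge

    def step(self, weighted_input: float) -> bool:
        """Advance one time step and return True if the neuron spikes."""
        # Existing charge decays, then the new weighted input is added.
        self.charge = self.charge * self.leak + weighted_input
        if self.charge >= self.threshold:
            self.charge = 0.0  # reset after emitting a spike
            return True
        return False


def run(neuron: LIFNeuron, inputs: list[float]) -> list[int]:
    """Feed a sequence of weighted inputs and return the 0/1 spike train."""
    return [int(neuron.step(x)) for x in inputs]


if __name__ == "__main__":
    # Closely spaced inputs accumulate past the threshold.
    print(run(LIFNeuron(), [0.3] * 8))  # [0, 0, 0, 1, 0, 0, 0, 1]
    # The same 0.3 pulses spread out in time (1.2 units in total):
    # the charge leaks away between pulses and never reaches 1.0.
    print(run(LIFNeuron(), [0.3, 0.0, 0.0] * 4))  # no spikes
    # With no leak, the same sparse input does eventually fire.
    print(run(LIFNeuron(leak=1.0), [0.3, 0.0, 0.0] * 4))  # [0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0]
```

The contrast between the last two runs is the point of the leak: the same total input produces a spike only when it arrives close enough together in time.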

== Hardware and Present Applications ==

[[File:spinnaker.jpg|200px|right|thumb|caption|The SpiNNaker supercomputer]][[File:spinnaker_arch.jpg|200px|right|thumb|caption|SpiNNaker architecture]]Although an incomplete understanding of the brain's intricacies has restricted the development of full-fledged neuromorphic computers, the past few decades have seen great strides in neuromorphic hardware. As might be expected, most of this hardware is built from silicon using [https://www.sciencedirect.com/topics/earth-and-planetary-sciences/cmos complementary metal oxide semiconductor (CMOS)] technology. One remarkable breakthrough is the University of Manchester's SpiNNaker neuromorphic supercomputer, whose eventual goal is to simulate up to one billion neurons at once. SpiNNaker's half-million silicon central processing units (CPUs), developed in 2011, communicate via a [https://www.geeksforgeeks.org/packet-switched-network-psn-in-networking/ packet-switched network] that mimics the dense neuronal connectivity of the human brain. The computer also contains a router that can send a single unit of information to more than one destination. Because the hardware itself brokers all [https://www.techtarget.com/searchnetworking/definition/packet packet] transmission, the computer achieves a bandwidth of up to five billion packets per second. Combined with its fifty-seven thousand nodes arranged in hexagonal arrays and various methods of communication between cores, this allows SpiNNaker to achieve very high levels of parallel processing while consuming about 90 kW of electrical power, roughly 4,500 times the approximately 20 W consumed by the human brain.<ref>Madhavan, A. (2023, September 13). Brain-inspired computing can help us create faster, more energy-efficient devices - if we win the race. NIST. https://www.nist.gov/blogs/taking-measure/brain-inspired-computing-can-help-us-create-faster-more-energy-efficient</ref> Current SpiNNaker research focuses on finding more efficient hardware for simulating neuronal spiking as it occurs in the human brain.<ref>SpiNNaker Project. (n.d.). APT. https://apt.cs.manchester.ac.uk/projects/SpiNNaker/project/</ref>
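The router's ability to deliver one packet to several destinations is a form of multicast routing. The sketch below assumes a key-and-mask lookup table, the general scheme SpiNNaker's multicast routers are usually described as using; the `RouteEntry` class, the destination names, and the fall-back to a single default link are hypothetical simplifications rather than SpiNNaker's actual router interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RouteEntry:
    """One hypothetical routing-table row."""

    key: int                      # value the masked packet key must equal
    mask: int                     # selects which bits of the packet key are compared
    destinations: frozenset[str]  # every output (core or link) that gets a copy


def route(table: list[RouteEntry], packet_key: int, default: str) -> frozenset[str]:
    """Return every destination for a packet; the first matching entry wins."""
    for entry in table:
        if (packet_key & entry.mask) == entry.key:
            return entry.destinations  # one packet in, several copies out
    # No entry matched: pass the packet along a single default link.
    return frozenset({default})


# Hypothetical table: packets whose upper byte is 0x01 are copied to
# two local cores and one neighbouring chip at the same time.
EXAMPLE_TABLE = [
    RouteEntry(key=0x0100, mask=0xFF00,
               destinations=frozenset({"core_3", "core_7", "link_east"})),
    RouteEntry(key=0x0200, mask=0xFF00,
               destinations=frozenset({"core_1"})),
]

if __name__ == "__main__":
    print(sorted(route(EXAMPLE_TABLE, 0x0142, default="link_west")))  # ['core_3', 'core_7', 'link_east']
    print(sorted(route(EXAMPLE_TABLE, 0x0300, default="link_west")))  # ['link_west']
```

Matching on masked keys lets one table row cover a whole range of source neurons, which keeps the table small even when many neurons share the same set of targets.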

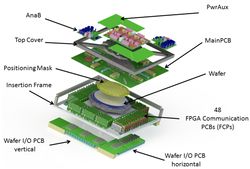

[[File:wafer.jpg|left|250px|thumb|caption|The wafer of BrainScaleS]]Another tangible advance in neuromorphic computing, BrainScaleS, is one of the most state-of-the-art large-scale analog spiking neural networks. Using programmable plasticity units (PPUs), a type of custom CPU, BrainScaleS emulates synaptic plasticity at one thousand times the speed observed in the human brain. The system comprises twenty silicon wafers, each with fifty million plastic synapses and two hundred thousand realistic neurons, and evolves its code based on the physical properties of the hardware. The wafers are built from High Input Count Analog Neural Network chips (HICANNs), which emulate dynamically changing neurons and synapses. Because the model's circuits are physically shorter than those in the brain, it runs much faster than the brain, which in turn allows it to require much less power than other simulated neural networks. Synaptic weights are determined by the current received by the associated neuron.<ref>About the BrainScaleS hardware. (n.d.). HBP Neuromorphic Computing Platform Guidebook (WIP). https://electronicvisions.github.io/hbp-sp9-guidebook/pm/pm_hardware_configuration.html</ref><ref>Hardware. (n.d.). Human Brain Project. https://www.humanbrainproject.eu/en/science-development/focus-areas/neuromorphic-computing/hardware/</ref>
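The speed-up claim can be made concrete with a plasticity rule. The text does not say which rules the BrainScaleS PPUs run, so the sketch below swaps in pair-based spike-timing-dependent plasticity (STDP), a widely used rule in which a synapse strengthens when the presynaptic spike precedes the postsynaptic one and weakens otherwise. The amplitudes and 20 ms time constants are hypothetical; only the factor of 1000 comes from the paragraph above. Dividing every time constant and spike interval by that factor leaves the weight change unchanged, which is how an accelerated system can compress the same learning into a thousandth of the wall-clock time.

```python
import math

SPEEDUP = 1000.0  # acceleration factor quoted in the text


def stdp_dw(dt: float, tau_plus: float = 20.0, tau_minus: float = 20.0,
            a_plus: float = 0.01, a_minus: float = 0.012) -> float:
    """Pair-based STDP weight change for dt = t_post - t_pre.

    dt and both time constants must share a unit (e.g. ms); the amplitudes
    and time constants are hypothetical illustration values.
    """
    if dt > 0:  # presynaptic spike came first: potentiate
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:  # postsynaptic spike came first: depress
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def to_hardware_time(t_bio: float, speedup: float = SPEEDUP) -> float:
    """Wall-clock duration on an accelerated system for a biological duration."""
    return t_bio / speedup


if __name__ == "__main__":
    # A 10 ms pre-before-post interval in biological time...
    bio = stdp_dw(10.0)
    # ...and the same interval and time constants compressed 1000-fold.
    hw = stdp_dw(to_hardware_time(10.0),
                 tau_plus=to_hardware_time(20.0),
                 tau_minus=to_hardware_time(20.0))
    print(bio, hw)  # the same weight change (up to floating-point rounding)
    # One biological day (86,400 s) of activity fits into about 86.4 s.
    print(to_hardware_time(86_400.0))
```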


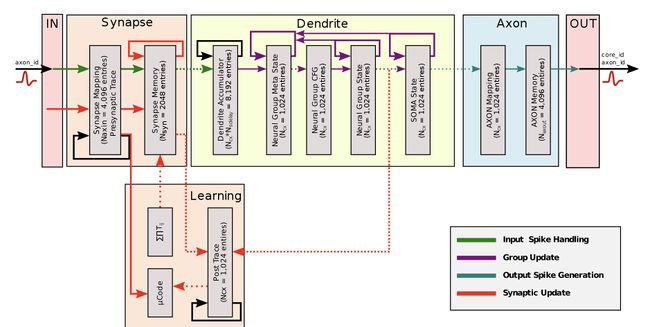

[[File:loihi.jpg|650px|right|thumb|caption|Loihi's connectivity]]Intel's [https://www.intel.com/content/www/us/en/newsroom/news/intel-unveils-neuromorphic-loihi-2-lava-software.html Loihi 2] neuromorphic processor provides perhaps an even more impressive illustration of neuromorphic computing. Released in 2021, the research chip contains one hundred and twenty-eight cores and simulates one million neurons at once, using a spiking neural network (SNN) that evolves its synapses using variations of backpropagation. In this network, each "neuron" is governed by its own ruleset, which modulates how the timing of its spikes evolves. When a spike enters a neuron, the neuron calculates whether the criteria are met for it to send its own spike onward. The chip is parallelized so that a larger event can be processed simultaneously as several smaller events, making computation more efficient. Loihi implements a current-based synapse (CUBA) leaky integrate-and-fire neuron model, in which each neuron has a "membrane potential" that decays over time; if the weighted sum of the input spikes pushes it past the neuron's firing threshold, the neuron propagates its own spike. Every core in the Loihi chips is fitted with a "learning engine" that regulates spike timing and strength by modulating the strengths of individual synapses. Because learning occurs in every [https://u-next.com/blogs/machine-learning/epoch-in-machine-learning/ learning epoch], the chip is not confined to any particular learning approach; for instance, it can operate as either a [https://clickup.com/blog/supervised-vs-unsupervised-machine-learning/ supervised or unsupervised] network. Loihi is considered the first neuromorphic chip to use a fully integrated SNN, combining key features such as variable synapses and population-based hierarchical connectivity, in which the chip uses subnetworks to reduce the need for chip-wide connectivity and to map networks onto the hardware more efficiently. Intel hopes to commercialize the chip once neuromorphic computing becomes mainstream.<ref>Intel advances neuromorphic with Loihi 2, New Lava Software framework... (n.d.). Intel. https://www.intel.com/content/www/us/en/newsroom/news/intel-unveils-neuromorphic-loihi-2-lava-software.html</ref><ref>Loihi - Intel. (n.d.). WikiChip. https://en.wikichip.org/wiki/intel/loihi</ref>
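The CUBA leaky integrate-and-fire behaviour described above can be sketched in discrete time: incoming spikes, multiplied by their synaptic weights, feed a synaptic current that decays each step, and that current in turn charges a membrane potential that also decays. This follows the commonly described update u[t] = (1 - du)·u[t-1] + Σ w·s and v[t] = (1 - dv)·v[t-1] + u[t], but it is a floating-point sketch that ignores hardware details such as fixed-precision arithmetic and on-chip learning. The class name `CubaLIF` and the values of `du`, `dv`, and `vth` are illustrative, not Loihi's defaults or Intel's API.

```python
from dataclasses import dataclass


@dataclass
class CubaLIF:
    """Floating-point sketch of a current-based (CUBA) LIF neuron."""

    du: float = 0.5   # fraction of synaptic current lost per step (hypothetical)
    dv: float = 0.2   # fraction of membrane potential lost per step (hypothetical)
    vth: float = 1.0  # firing threshold (hypothetical)
    u: float = 0.0    # synaptic current
    v: float = 0.0    # membrane potential

    def step(self, weights: list[float], spikes_in: list[int]) -> int:
        """Advance one time step; return 1 if the neuron fires, else 0."""
        # Weighted sum of the input spikes that arrived this step.
        a_in = sum(w * s for w, s in zip(weights, spikes_in))
        # The synaptic current decays, then absorbs the new input.
        self.u = self.u * (1.0 - self.du) + a_in
        # The membrane potential decays, then integrates the current.
        self.v = self.v * (1.0 - self.dv) + self.u
        if self.v > self.vth:
            self.v = 0.0  # reset after propagating a spike
            return 1
        return 0


def run(neuron: CubaLIF, weights: list[float], spike_steps: list[list[int]]) -> list[int]:
    """Drive the neuron with one list of input spikes per time step."""
    return [neuron.step(weights, spikes) for spikes in spike_steps]


if __name__ == "__main__":
    weights = [0.4, 0.3]  # two hypothetical presynaptic inputs
    # Both inputs fire once: the potential peaks near 0.91 and never fires.
    print(run(CubaLIF(), weights, [[1, 1], [0, 0], [0, 0], [0, 0]]))  # [0, 0, 0, 0]
    # A second spike on the first input arrives while the current is still
    # decaying, pushing the membrane potential over the threshold.
    print(run(CubaLIF(), weights, [[1, 1], [1, 0], [0, 0], [0, 0]]))  # [0, 1, 0, 0]
```

Separating the decaying current `u` from the potential `v` is what distinguishes the CUBA model from the simpler LIF neuron: an input spike keeps charging the membrane for several steps after it arrives.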

== Limitations and Future Research ==

To date, neuromorphic hardware, and neuromorphic computers more broadly, have served only demonstrative purposes and have yet to be put to practical use. Although their scale and parallel processing have been shown to greatly improve energy efficiency relative to other neural hardware and von Neumann computers, neuromorphic computers have yet to achieve substantially higher accuracy than other deep learning models.<ref name="nature"/> The lack of established standards for architecture, hardware, software, sample datasets, testing tasks, and evaluation metrics has made it difficult to draw conclusions about the efficacy of these networks.<ref name="ibm"/> [https://www.ibm.com/us-en IBM] has also cited neuromorphic computers' potential for improving autonomous vehicle navigation, detecting cyberattacks, understanding natural language acquisition, and advancing robot learning.<ref name="ibm"/> Much recent research centers on developing more advanced CPUs, circuits, and other hardware capable of running AI models quickly, accurately, and efficiently, in order to mimic the architecture and processing of the human brain ever more closely.<ref>Hess, P. (2024, November 7). How neuromorphic computing takes inspiration from our brains. IBM Research. https://research.ibm.com/blog/what-is-neuromorphic-or-brain-inspired-computing</ref>

==References==

<references />

Latest revision as of 06:27, 22 October 2022

By Chris Mecane

Neuromorphic computing, also known as neuromorphic engineering, is a fairly recent development in the realm of computational neuroscience. The goal of neuromorphic computing is to construct computers whose hardware and software mimic the anatomy and physiology of the human brain via replication of neural structure and emulation of synaptic communication.[1] Though still in its nascent years, the field is a source of hope for the future of computing and artificial intelligence.


How Neuromorphic Computing Works

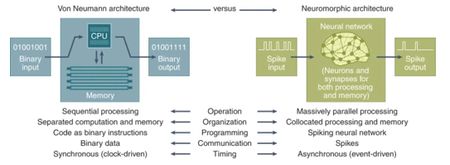

Neuromorphic computers are partially defined by the fact that they are different from the widespread von Neumann computers, which have distinct CPUs and memory units and store data as binary. In contrast, neuromorphic computers, in their efforts to process information analogously to the human brain, integrate memory and processing into a single mechanism regulated by its neurons and synapses[5]; neurons receive "spikes" of information, with the timing, magnitude, and shape of the spike all being meaningful attributes in the encoding of numerical information. As such, neuromorphic computers are said to be modeled using spiking neural networks (SSNs); spiking neurons behave similarly to biological neurons, in that they factor in characteristics such as threshold values for neuronal activation and synaptic weights which can change over time.[1] The aforementioned features can all be found in existing, standard neural networks that are capable of continual learning, such as perceptrons. Neuromorphic computers, however, would surpass these traditional networks in their ability to incorporate neuronal and synaptic delay; as information flows in, "charge" accumulates in the neurons until the surpassing of some charge threshold produces a "spike," or action potential. If the charge does not exceed the threshold over some given time period, it "leaks."[1] The neuronal and synaptic makeup of neuromorphic computers additionally allows for, unlike traditional computers, for many parallel operations to be running in different neurons at a given time; van Neumann computers utilize sequential processing of information. Through their parallel processing, along with their integration of processing and memory, neuromorphic computers provide a glimpse into a future full of vastly more energy-efficient computation.[5]


References

1. Caballar, R., & Stryker, C. (2024, September 3). What is neuromorphic computing? IBM. https://www.ibm.com/think/topics/neuromorphic-computing

2. Furber, S. (2016). Large-scale neuromorphic computing systems. Journal of Neural Engineering, 13(5), 051001. https://doi.org/10.1088/1741-2560/13/5/051001

3. Carver Mead: Microelectronics, Neuromorphic Computing, and life at the Frontiers of Science and Technology. (n.d.). SPIE, the international society for optics and photonics. https://spie.org/news/photonics-focus/septoct-2024/inventing-the-integrated-circuit

4. Mahowald, M. A., & Mead, C. (1991). The Silicon Retina. Scientific American, 264(5), 76–83. http://www.jstor.org/stable/24936904

5. Schuman, C.D., Kulkarni, S.R., Parsa, M. et al. Opportunities for neuromorphic computing algorithms and applications. Nat Comput Sci 2, 10–19 (2022). https://doi.org/10.1038/s43588-021-00184-y

6. Madhavan, A. (2023, September 13). Brain-inspired computing can help us create faster, more energy-efficient devices - if we win the race. NIST. https://www.nist.gov/blogs/taking-measure/brain-inspired-computing-can-help-us-create-faster-more-energy-efficient

7. SpiNNaker Project. (n.d.). APT. https://apt.cs.manchester.ac.uk/projects/SpiNNaker/project/

8. About the BrainScaleS hardware. (n.d.). HBP Neuromorphic Computing Platform Guidebook (WIP). https://electronicvisions.github.io/hbp-sp9-guidebook/pm/pm_hardware_configuration.html

9. Hardware. (n.d.). Human Brain Project. https://www.humanbrainproject.eu/en/science-development/focus-areas/neuromorphic-computing/hardware/

10. Intel advances neuromorphic with Loihi 2, New Lava Software framework... (n.d.). Intel. https://www.intel.com/content/www/us/en/newsroom/news/intel-unveils-neuromorphic-loihi-2-lava-software.html

11. Loihi - Intel. (n.d.). WikiChip. https://en.wikichip.org/wiki/intel/loihi

12. Hess, P. (2024, November 7). How neuromorphic computing takes inspiration from our brains. IBM Research. https://research.ibm.com/blog/what-is-neuromorphic-or-brain-inspired-computing