Applications of Neural Networks to Semantic Understanding

In the context of artificial neural networks, semantics refers to the capacity of a network to understand and represent the meaning of information, specifically how the meaning of words and sentences is discerned. The advance of neural networks in recent decades has had a profound impact on the areas of neuroscience and philosophy that deal with semantic processing and understanding.

In the brain, semantics is processed by networks of neurons distributed across several regions. The specific neural underpinnings of semantic understanding are an evolving area of research, with a variety of hypotheses about the exact mechanisms of semantic processing currently being explored. Nevertheless, the brain’s ability to continually learn and refine its semantic understanding has provided an important model for artificial systems attempting to emulate these features.

In artificial systems, neural networks analyze linguistic data, identifying patterns that capture context and the relationships within the data in order to create representations of meaning. A variety of model types are popular for encoding semantics, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformer models.

Mechanisms for semantic representation in the brain

Semantic processing in the brain involves a network of interconnected brain regions that work together to interpret language data and assign semantic meaning. While no definite consensus has yet been reached on which regions of the brain are responsible for semantic processing or what their exact mechanisms are, neuroimaging studies have indicated that a distinct set of seven regions is reliably activated during semantic processing[1]. These include the posterior inferior parietal lobe, middle temporal gyrus, fusiform and parahippocampal gyri, dorsomedial prefrontal cortex, inferior frontal gyrus, ventromedial prefrontal cortex, and posterior cingulate gyrus[2].

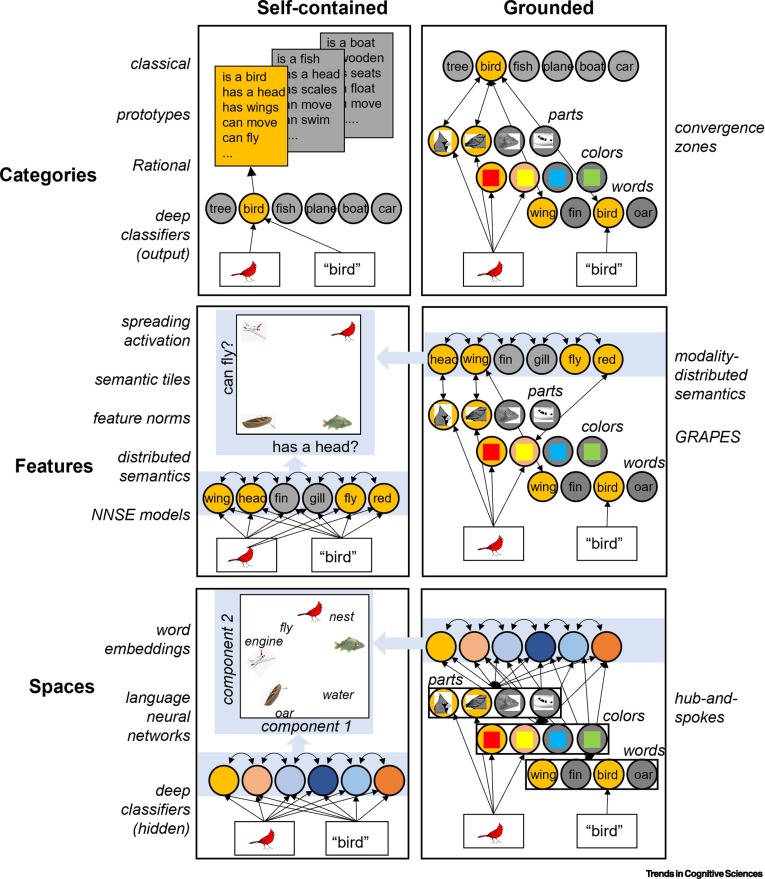

Computational hypotheses about how semantic information is encoded can be grouped into three model types: category-based, feature-based, and vector space representations [3]. In the first type of model, semantic concepts are processed as discrete categories that correspond to a given input; terms with similar concepts are associated together and activate the same regions [4]. The second posits that semantic information is processed as a set of features, with each perceived component linked to its associated features; similar concepts are associated with each other based on common properties [5]. Both approaches allow semantic data to be thought of as vectors in a high-dimensional space, with the category-based model encoding data as belonging to distinct categories and the feature-based model encoding specific terms as vectors with high values in the features they are associated with[6]. The third proposal also models semantic information as existing in a high-dimensional space, but without any interpretable meaning for its individual dimensions [7]. Concepts can be understood as similar to each other based on where their vectors are located in that space[8].
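
The three encoding schemes can be compared side by side in a small sketch. Everything in it is an illustrative assumption rather than data from the cited studies: the words, the category and feature names, and the numeric values are all made up, and cosine similarity is used here only as one common way to measure how close two vectors are.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Category-based: one dimension per discrete category (hypothetical categories).
CATEGORIES = ["animal", "vehicle"]
category_vectors = {
    "dog": [1, 0],
    "cat": [1, 0],
    "car": [0, 1],
}

# Feature-based: one dimension per interpretable feature (hypothetical features).
FEATURES = ["has_fur", "barks", "has_wheels", "is_alive"]
feature_vectors = {
    "dog": [1, 1, 0, 1],
    "cat": [1, 0, 0, 1],
    "car": [0, 0, 1, 0],
}

# Vector space: dense coordinates whose individual dimensions carry
# no interpretable meaning (made-up values).
dense_vectors = {
    "dog": [0.8, 0.1, -0.3],
    "cat": [0.7, 0.2, -0.2],
    "car": [-0.5, 0.9, 0.4],
}

schemes = [
    ("category", category_vectors),
    ("feature", feature_vectors),
    ("vector space", dense_vectors),
]
for name, table in schemes:
    dog_cat = cosine(table["dog"], table["cat"])
    dog_car = cosine(table["dog"], table["car"])
    print(f"{name}: dog~cat={dog_cat:.3f}, dog~car={dog_car:.3f}")
```

In all three toy tables "dog" ends up closer to "cat" than to "car". The schemes differ in how graded that similarity is and in whether the individual dimensions can be read off as categories or features.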

Representations of semantics in artificial neural networks

Three of the most popular artificial architectures for processing semantic information are convolutional neural networks, recurrent neural networks, and transformer models. CNNs process semantic information by applying convolutional filters over text data to capture local patterns and features, from which semantic relationships in the text are derived [9]. RNNs are commonly used to handle sequential data such as language. One type of RNN commonly used for semantic processing is the long short-term memory (LSTM) model, which adds a mechanism of gates to each cell that allows the model to retain information longer and better capture semantic information over a larger context[10]. Transformer models, introduced in 2017, have become the standard architecture for processing semantic information. These models employ attention mechanisms that allow the model to weigh the relevance of each word, or each part of an input, relative to the others. Text is first converted to numerical tokens, which are then weighted using the attention mechanism so that the whole semantic context of the input is considered, improving the model’s representation of the relationships between terms.
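
The weighting step performed by attention can be sketched in a few lines. The following is a minimal, simplified illustration of scaled dot-product self-attention. It assumes toy two-dimensional token vectors with made-up values, and it omits the learned query, key, and value projections that real transformer models apply, so it should be read as a sketch of the idea rather than as any particular model's implementation.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Returns the attended outputs and the weight each query
    assigns to each key.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Relevance of every key to this query, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical token vectors; real models learn these.
tokens = ["river", "bank", "money"]
embeddings = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]

# Self-attention: the same vectors serve as queries, keys, and values.
outputs, weights = attention(embeddings, embeddings, embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the input, including itself. With these toy values, "river" gives more weight to "bank" than to "money" because their vectors are more closely aligned.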

Semantic encoding in artificial neural networks relies on different techniques to represent and help process semantic meaning. Embedding techniques like word2vec and GloVe are used by models to create vector representations in which words with similar meanings are mapped closer together, allowing for understanding of semantic meaning in situations like similes or analogies [11][12]. For more complex semantic understanding, models rely on contextual embeddings, in which the vector for a word depends on the text around it.
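
The idea that words with similar meanings are mapped closer together, and that analogies can be read off the resulting geometry, can be illustrated with a toy example. The vectors below are hand-picked for illustration and are not taken from word2vec or GloVe, and the `analogy` helper is a hypothetical name introduced here. Trained embeddings have hundreds of dimensions, and analogy arithmetic on them works only approximately.

```python
import math

# Hand-made three-dimensional "embeddings" (illustrative values only).
embeddings = {
    "king":  [0.9, 0.9, 0.1],
    "queen": [0.85, -0.8, 0.15],
    "man":   [0.1, 0.9, 0.0],
    "woman": [0.1, -0.9, 0.0],
    "apple": [-0.8, 0.0, 0.9],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c):
    """Answer 'a is to b as c is to ?' by vector arithmetic.

    Computes b - a + c and returns the closest remaining word.
    """
    target = [
        vb - va + vc
        for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])
    ]
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(embeddings[w], target))

print(analogy("man", "king", "woman"))  # prints "queen" for these toy vectors
```

The input words are excluded from the candidates, a common convention when evaluating analogies, since the nearest vector to the computed target is often one of the inputs themselves.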

Two important implementations of the transformer architecture are GPT and BERT. These frameworks allow for a more robust representation of semantic meaning by introducing techniques for contextual embedding, which enables transformer models to represent semantic meaning in a more nuanced fashion [13]. BERT (Bidirectional Encoder Representations from Transformers) was developed by Google in 2018 to provide solutions to common language tasks. This sort of transformer model uses a large amount of data to pretrain the model on a variety of tasks, allowing it to understand context better. It uses a bidirectional approach, considering the words both to the left and to the right of a given position in the text, allowing for a more nuanced understanding of semantic meaning[14]. GPT (Generative Pretrained Transformer) was developed by OpenAI on a similar architecture. GPT processes semantic data in one direction, using a causal approach to predict the next word in a sequence[15].
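
The difference between the bidirectional and causal approaches comes down to which positions each token is allowed to use as context. The sketch below is a toy illustration of that visibility rule only. The function names are hypothetical, and it does not reproduce the actual training procedure or implementation of either BERT or GPT.

```python
def visibility_mask(n_tokens, causal):
    """Return mask[i][j] == True when position i may use position j.

    causal=False: every position sees the whole sequence (bidirectional).
    causal=True:  each position sees only itself and earlier positions.
    """
    return [
        [(j <= i) or not causal for j in range(n_tokens)]
        for i in range(n_tokens)
    ]

def visible_context(tokens, position, causal):
    """List the tokens available as context at a given position."""
    mask = visibility_mask(len(tokens), causal)
    return [tok for tok, ok in zip(tokens, mask[position]) if ok]

tokens = ["the", "cat", "sat", "down"]

# Bidirectional: context on both the left and the right is visible.
print(visible_context(tokens, 1, causal=False))  # ['the', 'cat', 'sat', 'down']

# Causal: only the current and preceding tokens are visible.
print(visible_context(tokens, 1, causal=True))   # ['the', 'cat']
```

In attention-based models, a mask of this kind is typically applied to the attention scores so that disallowed positions receive zero weight.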

Despite impressive results from artificial models, notable limitations and challenges remain for modeling semantics. Perhaps the most important is the question of what sort of understanding is being generated by artificial networks: while contemporary language models are becoming increasingly adept at representing semantics mathematically and dealing with complex semantic contexts, it is still unclear what relationship this has to human semantic understanding. Semantic processing in the brain relies on experience for its inputs, which arrive in a much more complex, multimodal form. The development of multimodal language models may provide a closer link to what is seen in biological systems, but even so, true semantic understanding in artificial systems may remain elusive.

Comparison of biological and artificial systems

Biological neural systems and artificial neural networks share fundamental principles and mechanisms that form the basis for how they process information, including semantic information. Despite these similarities, they also exhibit key differences in structure and function. Artificial architectures going back to the first perceptron models were in part inspired by brain structures; however, when it comes to encoding semantic information, there are important differences between biological and artificial systems. Most artificial neural networks focus on synaptic plasticity as the basis for memory, which is key for building semantic context [16]. However, emerging theories in neurobiology suggest that other mechanisms, including engram cells, play an important role in memory [17]. This indicates that further refinement is possible for biologically influenced artificial networks. One promising application of biological principles to advance semantic representation in artificial systems is the implementation of sleep-like states. During sleep, biological systems promote learning through processes like memory replay, which allows for better integration of new information into existing contexts [18]. The addition of such biological principles to artificial systems could allow for further improvement of semantic processing.

Philosophical implications of artificial models of semantic representation

The ability of neural networks to encode semantics sparks debates about understanding, consciousness, and the nature of mind. While artificial systems like neural networks process semantics through mathematical representations, critics argue that these systems lack true understanding or subjective experience. This perspective is perhaps best exemplified by John Searle’s Chinese Room argument, which suggests that artificial systems manipulate data without genuinely knowing the meaning of their outputs[19]. The general conclusion of such arguments is that language data on its own is not sufficient for semantic understanding. Counterarguments in favor of artificial systems having the capacity for genuine semantic understanding typically focus on the functional capabilities of these systems rather than the experiential component of understanding. One such counterargument takes a functionalist approach, suggesting that semantic understanding should be judged by the ability of a system to produce meaningful responses[20]. Another focuses on the possibility of emergent understanding in future advanced artificial systems. This line of argument holds that as artificial networks grow in complexity, they may develop forms of semantic understanding that are indistinguishable from human understanding.

The ability of neural networks to encode and process semantics challenges foundational concepts in the philosophy of language. It forces a reexamination of what it means to understand, how meaning is constructed, and whether non-biological entities can ever fully participate in linguistic systems.

Conclusion

The exploration of semantics in artificial neural networks has profound implications for both science and philosophy. While artificial systems have made remarkable advancements in representing and processing semantic information, their methods remain fundamentally different from those of biological systems. These differences highlight critical questions about the nature of understanding, the role of experience in meaning-making, and the boundaries between simulation and genuine comprehension. As artificial systems advance, further integration of biological principles may offer a path towards more sophisticated semantic models. However, it remains an open question whether such models will reach the same level of semantic understanding as the human brain.

References

<references>