Attention and Transformers

(By Aleksa Sotirov)

Transformers are a type of deep learning model, characterised chiefly by their use of attention mechanisms. Attention in machine learning is a technique for weighting different parts of the input data by their significance, loosely mimicking human attention. In particular, transformers use self-attention to process sequential data, so their main applications are in fields like natural language processing (NLP) and computer vision (CV). They differ from previous models, including recurrent neural networks (RNNs) and long short-term memory (LSTM) models, in being more parallelisable and more efficient to train, which is largely due to the attention mechanism.

Background

Before transformers, the most widely used natural language processing models were based on recurrence. This means not only that each word or token is processed in a separate step, but also that the model maintains a series of hidden states, each dependent on the one before it. For example, one of the most basic problems in NLP is next-word prediction. In some cases very little context suffices: given the input "Ruth hit a long fly ___", a model only has to look at the last two words in order to predict the next word as "ball". However, in a sentence like "Check the battery to find out whether it ran ___", we would have to look seven words back in order to get enough context from the word "battery" to determine that the next word should be "down". A model that conditions on a fixed window of previous words would have to be of seventh order here, and the number of word combinations it must account for grows exponentially with the order, which quickly becomes impractical.

So, this kind of modelling works for shorter sentences, but capturing long-range dependencies in this manner requires enormous computational power, making it less than desirable. This is where the introduction of attention really helped: instead of looking a fixed N words back, the model can pick out the most significant relationships between words and focus only on those. Before transformers, state-of-the-art systems built on Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks kept their recurrent structure and added an attention mechanism on top of it.

However, in 2017, a team at Google Brain proposed a new model that dropped the recurrent element entirely and relied solely on attention. It turns out that processing the tokens one at a time is not essential for learning: these systems work at least as well as the recurrent ones, but take far less time and computational power to train, and are thus far more practical. These attention-only systems are known as transformers.

Attention

Dot-product attention

To understand attention, it is helpful to think about the common task of machine translation. When we process the input sentence, we first have to go through a process known as tokenization, which splits the text into smaller units called tokens (words or pieces of words). In transformers, this is usually accomplished through byte pair encoding, wherein the most frequent pair of adjacent symbols is repeatedly merged into a new symbol until a target vocabulary size is reached, at which point we've gone from characters to tokens. Then, each token in our vocabulary has to be embedded into a vector space, so that we can perform mathematical manipulations with it. This is usually done with a learned (often pretrained) embedding table, such that tokens with similar meanings have vector embeddings that are close together.
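The merging step of byte pair encoding can be illustrated with a minimal sketch. It assumes a tiny whitespace-separated corpus and character-level starting symbols; the function names (`most_frequent_pair`, `merge_pair`, `learn_bpe`) are hypothetical and chosen for illustration only, and production tokenizers add details (end-of-word markers, byte-level fallbacks) that are omitted here.

```python
from collections import Counter

def most_frequent_pair(words):
    """Count adjacent symbol pairs across all words; return the most common."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return max(pairs, key=pairs.get) if pairs else None

def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a whitespace-split corpus."""
    words = Counter(tuple(w) for w in corpus.split())
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words

# Toy corpus (an assumption for illustration): "low" dominates, so its
# characters are merged first.
merges, vocab = learn_bpe("low low low lower lowest", num_merges=2)
```

After two merges on this toy corpus, the frequent word "low" has become a single token, while "lower" and "lowest" are represented as "low" followed by individual characters.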

In the most bare-bones version of attention, we would take this sequence of token embeddings (which forms a matrix) and call it our Keys. Then, for each token in the sequence, which we call a Query, we would take the dot product of that token with each of the Keys to get a sequence of scores for that particular Query. Because of how the embeddings are constructed, each of these scores represents how similar the Query token is to each token in the input sentence, so if we normalize them with a softmax function, we end up with a sequence of weights that are positive and sum to one. We can then take the weighted sum of the input embeddings (here called the Values), and the result is one output for each token in the input sentence, which in essence is the same token with more "context". Notice that this process neither uses word order nor involves any learned weights; it simply compares semantic meanings.
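As a rough illustration of this bare-bones version, the sketch below uses the same embeddings as Queries, Keys, and Values. The two-dimensional embeddings are made up for the example, and the helper names (`softmax`, `dot`, `basic_attention`) are hypothetical.

```python
import math

def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def basic_attention(embeddings):
    """The same embeddings act as queries, keys and values; nothing is learned."""
    dim = len(embeddings[0])
    outputs = []
    for query in embeddings:
        scores = [dot(query, key) for key in embeddings]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one coordinate at a time.
        outputs.append([
            sum(w * value[d] for w, value in zip(weights, embeddings))
            for d in range(dim)
        ])
    return outputs

# Made-up 2-d embeddings for a three-token sentence: the first two tokens
# are similar to each other, the third is different.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
contextualised = basic_attention(tokens)
```

Because no position information is involved, reversing the order of `tokens` simply reverses the order of the outputs.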

Adjustments

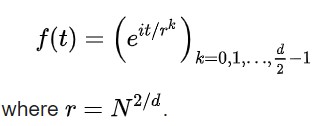

The above model, as mentioned, completely disregards word order. However, sometimes word proximity is very important, which means that we must somehow encode the position of the word in the sentence. To do this, we can have word position perturb the word vectors in a different direction around a circle, which can be represented thusly:

In essence, words which are close together are perturbed in a similar direction, and therefore stay close together, whereas words that are far apart get perturbed in opposite directions.
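One common way to realise this circular perturbation is the sinusoidal encoding from the original transformer paper, sketched below. Treat it as one possible scheme rather than the only one; the function names `positional_encoding` and `add_position` are hypothetical.

```python
import math

def positional_encoding(position, dim):
    """Sinusoidal encoding: each (sin, cos) pair is a point on a unit circle."""
    encoding = []
    for i in range(0, dim, 2):
        # Lower dimensions rotate quickly with position, higher ones slowly.
        angle = position / (10000 ** (i / dim))
        encoding.append(math.sin(angle))
        if i + 1 < dim:
            encoding.append(math.cos(angle))
    return encoding

def add_position(embeddings):
    """Perturb each token embedding by the encoding of its position."""
    dim = len(embeddings[0])
    return [
        [x + p for x, p in zip(vec, positional_encoding(pos, dim))]
        for pos, vec in enumerate(embeddings)
    ]

def distance(u, v):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

# Encodings of nearby positions are closer together than those of distant ones.
near = distance(positional_encoding(0, 4), positional_encoding(1, 4))
far = distance(positional_encoding(0, 4), positional_encoding(3, 4))
```

For these small positions `near` is smaller than `far`; because sines and cosines are periodic, the relationship is not strictly monotonic over very long distances.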

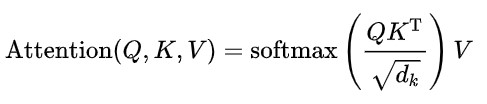

We also notice that there are no weight changes in this mechanism, which leaves little room for optimisation. Therefore, for all three appearances of the input tokens (the Key, Query, and Value vectors), we will have them multiplied by a different weight matrix (Wk, Wk, and Wv respectively), which are all learned separately. For the purposes of learning, the output of this operation needs to be scaled down by a certain factor, in this case the square root of the dimension of the Key vector. So, the mathematical notation for the self-attention mechanism ends up looking something like:
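The learned projections and the scaling step described above can be sketched in plain Python as follows. Matrices are lists of rows, and the identity weight matrices in the usage example are placeholders standing in for learned values; the helper names are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Compute softmax(Q K^T / sqrt(d_k)) V with Q, K, V projected from x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights

# Placeholder inputs: three tokens with 2-d embeddings, identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
```

Each row of `weights` sums to one and says how much the corresponding token attends to every token in the sequence; in a trained model the three projection matrices would be learned by gradient descent rather than fixed.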

The mechanism described above is called an attention head. It is important to note that a transformer actually uses multi-head attention, meaning that several attention heads run in parallel, each with its own weight matrices. For example, in a sentence like "The king gave the sword to the servant", the word "gave" might attend to "king", "servant", or "sword", so several attention heads are needed to capture these different relationships; their results are concatenated and passed on through a linear layer. Research has suggested that these separate attention heads do seem to pay attention to separate syntactic relationships, i.e. some are specialized for objects of verbs, some for determiners of nouns, and some for pronoun coreference.
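A minimal sketch of multi-head attention follows. It assumes that each head has its own query, key, and value matrices and that the concatenated head outputs are mixed by a single output matrix, here called `w_o` (a name taken from the original transformer paper, not from this article). The hand-picked weights are placeholders for learned values.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_head(x, w_q, w_k, w_v):
    """One scaled dot-product attention head."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    weights = [
        softmax([sum(a * b for a, b in zip(qi, kj)) / scale for kj in k])
        for qi in q
    ]
    return matmul(weights, v)

def multi_head_attention(x, heads, w_o):
    """Run every head on x, concatenate per token, then mix with w_o."""
    head_outputs = [attention_head(x, *head) for head in heads]
    concatenated = [
        sum((out[t] for out in head_outputs), []) for t in range(len(x))
    ]
    return matmul(concatenated, w_o)

# Placeholder setup: 4-d embeddings, two heads that each look at 2 dimensions.
x = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
first_half = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0]]
second_half = [[0.0, 0.0], [0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
heads = [(first_half,) * 3, (second_half,) * 3]
w_o = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
mixed = multi_head_attention(x, heads, w_o)
```

Since each head works on its own projection of the input, different heads are free to learn different relationships between the same tokens.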

Transformers

Architecture

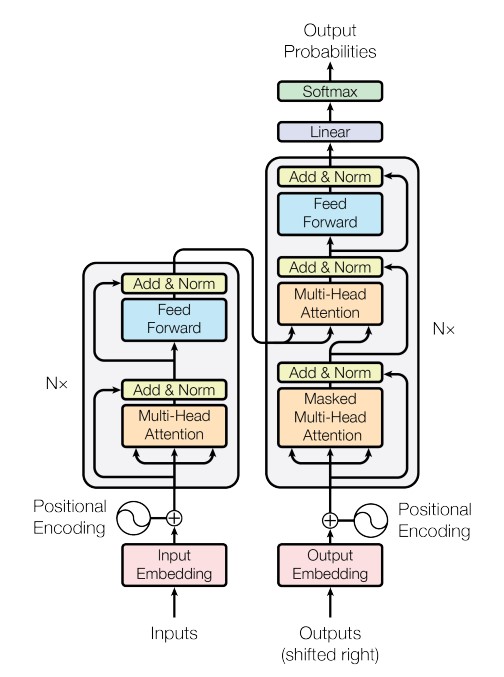

The multi-head attention block stands at the core of the transformer model, but there is a lot more going on within it. A schematic of the model would look something like this:

As we see, the transformer is made up of an encoder stack and a decoder stack, consisting of a number of encoding and decoding layers respectively. Stacking several layers lets each one refine the representation produced by the one before, so that the result does not hinge on any single layer. The role of the encoder is to add context to the input sequence in order to establish connections between tokens, whereas the decoder's role is to take the encoder's output and use it to generate the corresponding sequence in the target language.

Each layer of the encoder is made up of a multi-head attention block that feeds into a feed-forward layer, which, along with a layer normalisation mechanism, further processes the output and keeps it well-behaved for training. The output of the encoder goes into each decoding layer, but during training the decoder also receives the target sequence as an input. To avoid the training turning into a simple copy-and-paste mechanism, this input is shifted by one position, and it is also subject to something called masking. Namely, through some simple matrix manipulations, we stop the decoder from accessing the words that come after the current target word in the sentence, which mirrors the situation at test time, when those words have not yet been generated.
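The masking step can be sketched as below. The scores are made-up numbers, and the helper names `causal_mask` and `masked_softmax` are hypothetical. Real implementations typically set the masked scores to negative infinity before the softmax, which has the same effect as the zero weights used here.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, allowed):
    """Softmax over the allowed positions only; masked positions get weight 0."""
    peak = max(s for s, ok in zip(scores, allowed) if ok)
    exps = [math.exp(s - peak) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up attention scores for a three-token target sentence.
scores = [[0.5, 1.2, -0.3], [0.1, 0.4, 2.0], [1.0, 1.0, 1.0]]
mask = causal_mask(3)
weights = [masked_softmax(row, allowed) for row, allowed in zip(scores, mask)]
```

The first token can only attend to itself, the second to the first two tokens, and only the last token sees the whole sentence.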

At the end of the process, the decoder produces a vector of scores for each position. As before, we put it through a softmax function in order to turn it into a probability distribution over the output vocabulary. We can then simply take the word with the highest probability and use it in our translation. We do this for every token, and the result is, hopefully, a translation.
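This final step, often called greedy decoding, might look like the following sketch. The four-word vocabulary and the decoder scores are invented for the example, and `greedy_pick` is a hypothetical helper name.

```python
import math

def softmax(logits):
    """Turn raw decoder scores into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(logits, vocabulary):
    """Return the most probable word and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return vocabulary[best], probs[best]

# Invented vocabulary and decoder scores, one score vector per output position.
vocabulary = ["the", "cat", "sat", "<eos>"]
logits_per_step = [
    [2.0, 0.1, -1.0, 0.0],
    [0.2, 3.1, 0.3, -0.5],
    [0.0, 0.4, 2.2, 1.0],
]
translation = [greedy_pick(step, vocabulary)[0] for step in logits_per_step]
```

Always picking the single most probable word is the simplest strategy; it matches the description above but is not the only way to turn the probabilities into text.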

Applications

We see that, unlike the recurrent models, the attention-based model of the transformer is very well suited to parallelisation, as it does not process the tokens sequentially, and instead looks at relationships between words across the sentence as a whole. Also, unlike the previous models, it is based almost exclusively on matrix multiplications, a task for which today's GPUs are highly optimised. This makes the transformer a cost-effective model for all kinds of sequential data, and especially for natural language processing. Applications have included machine translation, language modeling, sentiment analysis, question answering, and document summarization. Pre-trained language models like BERT, which underpins many of Google's natural language processing systems, are built on the transformer architecture.

References

[1] Rohrer, B. (October 29, 2021) Transformers from Scratch.

[2] Vaswani et al. (June 12, 2017) Attention Is All You Need.

[3] Alammar, J. (June 27, 2018) The Illustrated Transformer.

[4] Kaiser, L. (October 4, 2017) Attentional Neural Network Models.

[5] Horev, R. (November 10, 2018) BERT Explained: State of the art language model for NLP. Published in Towards Data Science.

[6] Rasa Algorithm Whiteboard. (2020) Transformers & Attention Series.

[7] Wang et al. (February 22, 2019) GLUE: A Multi-Task Benchmark and Analysis Platform for Natural Language Understanding.

[8] Clark et al. (August 1, 2019) What Does BERT Look at? An Analysis of BERT's Attention.