CNNs in Facial Recognition and Bias

By Neha Bhardwaj

Convolutional Neural Networks

A convolutional neural network, or CNN, is a type of artificial neural network. In terms of architecture, a CNN can be viewed as a regularized multilayer perceptron in which each neuron connects only to a small local region of its input and the same weights are reused across the image. CNNs are most commonly used for image classification: they take in an input image and learn to differentiate between the various objects and characteristics within it. [1] The benefit of a CNN over an ordinary fully connected feed-forward network is that it can perform a much more sophisticated analysis of an image, because it takes into account spatial dependencies between neighboring pixels (and, for image sequences, temporal dependencies).

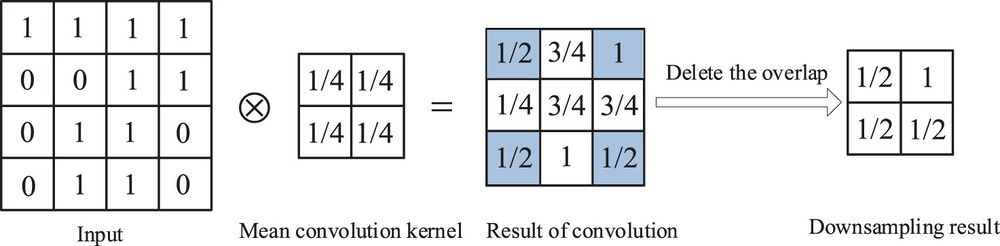

A good CNN must be able to convert images into a form that is easier to process without losing any key information about the image. This begins in the first layer of the network, called the Convolutional Layer, which involves three components: the input data, a filter, and a feature map. [2] If, for example, the model is analyzing a color image, the input data is a three-dimensional array of pixel values: height, width, and the three RGB color channels. The filter, also called a Kernel (K), is a small matrix of weights that traverses every region of the image. At each position it is multiplied element-wise with the pixels beneath it and the products are summed, which produces one entry of the convolved feature (the feature map).
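
The sliding-kernel step described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: it assumes a single-channel (grayscale) image, a stride of 1, and no padding, and the function name `convolve2d_valid`, the 4x4 image, and the 2x2 kernel are all made up for the example. For an RGB image, the same sum would also run over the three color channels.

```python
def convolve2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map."""
    img_h, img_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out_h, out_w = img_h - k_h + 1, img_w - k_w + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel element-wise with the patch beneath it and sum.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_h)
                for dj in range(k_w)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 grayscale image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical 2x2 kernel that responds to left-to-right increases in intensity.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d_valid(image, kernel)
for row in feature_map:
    print(row)  # each row is [0, 2, 0]: the response peaks at the edge
```

In a trained CNN the kernel weights are learned rather than chosen by hand, but the arithmetic at each position is the same.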

The objective of the Convolution Operation is to extract features from the image, and stacking multiple Convolutional Layers is what makes it possible to extract high-level ones. Typically, the first layer captures low-level features like edges and color, while each additional layer allows the CNN to analyze progressively higher-level features. The convolved feature is smaller than, the same size as, or larger than the original image depending on whether Valid, Same, or Full Padding is used.
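
The effect of the three padding modes on output size can be checked with a small helper. This is a sketch under stated assumptions: a stride of 1, an odd kernel size (so that Same Padding can be split evenly on both sides), and a hypothetical function name `conv_output_size`.

```python
def conv_output_size(input_size, kernel_size, padding):
    """Return the output width (or height) of a stride-1 convolution."""
    if padding == "valid":
        pad = 0                       # no padding: the output shrinks
    elif padding == "same":
        pad = (kernel_size - 1) // 2  # assumes an odd kernel size
    elif padding == "full":
        pad = kernel_size - 1         # kernel may overlap the image by one pixel
    else:
        raise ValueError(f"unknown padding mode: {padding}")
    return input_size + 2 * pad - kernel_size + 1


# Hypothetical 5x5 image convolved with a 3x3 kernel.
for mode in ("valid", "same", "full"):
    print(mode, conv_output_size(5, 3, mode))
# valid 3
# same 5
# full 7
```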

Next, the Pooling Layer reduces the dimensionality of the convolved feature and extracts its dominant features, which lowers the computation needed in later layers. There are two common types of pooling: Max Pooling returns the maximum value in each region the Kernel covers, while Average Pooling returns the average of all the values in that region.
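
Both pooling types can be sketched with one function. The example assumes non-overlapping square windows (the stride equals the window size), and the name `pool2d` and the 4x4 feature map are hypothetical.

```python
def pool2d(feature_map, size, mode="max"):
    """Pool non-overlapping `size` x `size` windows of a 2D feature map."""
    h, w = len(feature_map), len(feature_map[0])
    pooled = []
    for i in range(0, h - size + 1, size):
        row = []
        for j in range(0, w - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            if mode == "max":
                row.append(max(window))
            elif mode == "average":
                row.append(sum(window) / len(window))
            else:
                raise ValueError(f"unknown pooling mode: {mode}")
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map.
feature_map = [
    [1, 3, 2, 0],
    [5, 7, 4, 2],
    [0, 2, 8, 6],
    [4, 2, 2, 0],
]

print(pool2d(feature_map, 2, "max"))      # [[7, 4], [4, 8]]
print(pool2d(feature_map, 2, "average"))  # [[4.0, 2.0], [2.0, 4.0]]
```

Either way, the 4x4 map is reduced to 2x2, so each pooling step here cuts the number of values by a factor of four.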

Finally, a Fully Connected Layer (FC Layer) classifies the image. The pooled feature maps are flattened into a column vector and fed into this feed-forward neural network, and the model is trained using backpropagation. Over multiple epochs, the model learns to distinguish the dominant features of each class, and the Softmax Classification technique converts the final layer's outputs into a probability for each class.
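
The flatten, fully connected, and softmax steps can be sketched as follows. This is only an illustration of the forward pass: the weights below are fixed, made-up numbers, whereas in a real network they would be learned through backpropagation, and the helper names `flatten`, `dense`, and `softmax` are hypothetical.

```python
import math


def flatten(feature_maps):
    """Turn a list of 2D feature maps into a single 1D vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def dense(vector, weights, biases):
    """One fully connected layer: a weighted sum plus bias per output class."""
    return [
        sum(w * x for w, x in zip(weight_row, vector)) + b
        for weight_row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical input: one pooled 2x2 feature map and three output classes.
feature_maps = [[[1.0, 0.0], [0.0, 1.0]]]
weights = [
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 1.0, 0.0],
    [0.5, 0.5, 0.5, 0.5],
]
biases = [0.0, 0.0, 0.0]

vector = flatten(feature_maps)           # [1.0, 0.0, 0.0, 1.0]
scores = dense(vector, weights, biases)  # [2.0, 0.0, 1.0]
probabilities = softmax(scores)
predicted_class = probabilities.index(max(probabilities))
print(predicted_class, probabilities)
```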

Use of CNNs for Facial Recognition

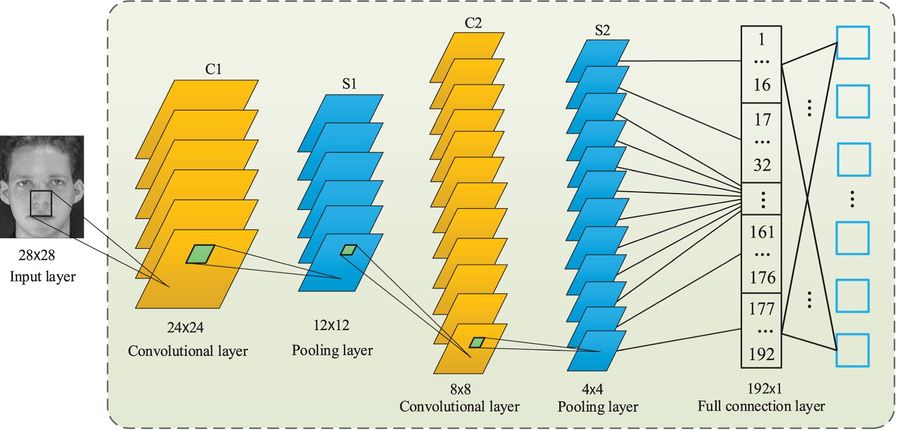

CNNs have been proposed for facial recognition: a network trained on a large database of low-resolution images of faces was able to effectively extract facial features and analyze them. [3] One common CNN model for facial recognition is similar to the classical LeNet-5 model, but with a few adjustments. This CNN model has two convolutional layers (C1 and C2) and two pooling layers (S1 and S2), arranged in the form C1-S1-C2-S2.

The input layer has only one feature map (the grayscale input image). C1 produces 6 feature maps, in which each neuron is convolved with a 5x5 kernel. S1 outputs 6 feature maps, each calculated by pooling the corresponding map from the previous layer. C2 and S2 have a similar structure and calculation, except that each has 12 feature maps. A fully connected single-layer perceptron then produces the final output, a 40-dimensional vector with one entry for each of the 40 individuals to be recognized.
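
To make the C1-S1-C2-S2 structure concrete, the sketch below traces how the feature-map sizes could change from layer to layer. The feature-map counts (6 and 12), the 5x5 kernel, and the 40 outputs come from the description above. Everything else is an assumption made for illustration and is not taken from the cited paper: a 28x28 input, no padding, a stride of 1, a 5x5 kernel in C2 as well, and 2x2 non-overlapping pooling.

```python
def conv_size(size, kernel):
    """Output size of a stride-1 convolution with no padding."""
    return size - kernel + 1


def pool_size(size, window):
    """Output size of non-overlapping pooling."""
    return size // window


INPUT_SIZE = 28   # assumption: 28x28 grayscale input
KERNEL = 5        # 5x5 kernel (stated for C1, assumed for C2)
POOL = 2          # assumption: 2x2 pooling windows
NUM_CLASSES = 40  # one output per individual

# (layer name, layer type, number of feature maps)
layers = [("C1", "conv", 6), ("S1", "pool", 6), ("C2", "conv", 12), ("S2", "pool", 12)]

size = INPUT_SIZE
shapes = [("input", 1, size)]
for name, kind, maps in layers:
    size = conv_size(size, KERNEL) if kind == "conv" else pool_size(size, POOL)
    shapes.append((name, maps, size))

for name, maps, side in shapes:
    print(f"{name}: {maps} feature map(s) of {side}x{side}")

# The S2 output is flattened and fed to the fully connected layer.
_, last_maps, last_side = shapes[-1]
flattened = last_maps * last_side * last_side
fc_weights = flattened * NUM_CLASSES
print(f"flattened vector: {flattened} values, FC weights: {fc_weights}")
```

Under these assumptions the sizes run 28, 24, 12, 8, 4, so the fully connected layer maps a 192-value vector to the 40 outputs. A different input size or pooling window would change those numbers but not the overall pattern.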

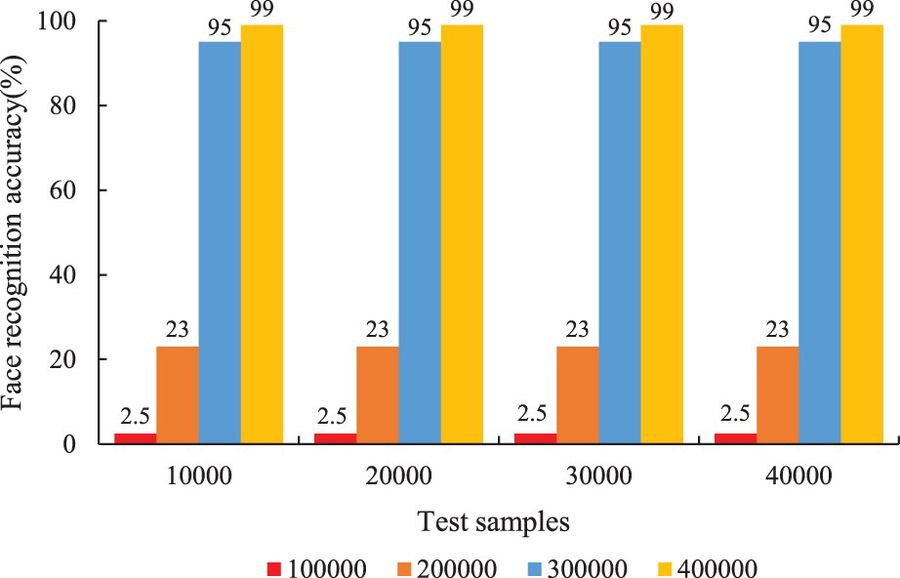

Training datasets for CNNs need to be large and varied for the model to recognize faces reliably. The Olivetti Research Laboratory (ORL) face dataset contains only 400 face images of 40 individuals, so the dataset was augmented using four data augmentation methods: horizontal flip, shift, scaling, and rotation. Tests have shown that face recognition accuracy increases as the number of training samples increases. The augmented dataset provides a wider variety of sample features, which enhances the training of the network and results in high face recognition accuracy.
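
The four augmentation methods can be sketched in plain Python on a tiny image. This is an illustrative approximation, not the procedure used in the cited study: the helper names, the nearest-neighbor sampling, the zero fill for vacated pixels, and the parameter values (a 1-pixel shift, a 1.1 scale factor, a 10-degree rotation) are all assumptions.

```python
import math


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def shift(image, dx, dy, fill=0):
    """Move the image right by dx and down by dy pixels, filling vacated pixels."""
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if 0 <= y + dy < h and 0 <= x + dx < w:
                out[y + dy][x + dx] = image[y][x]
    return out


def warp_nearest(image, scale=1.0, degrees=0.0, fill=0):
    """Scale and rotate about the image center using nearest-neighbor sampling."""
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    cos_t = math.cos(math.radians(degrees))
    sin_t = math.sin(math.radians(degrees))
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Map each output pixel back to the source pixel it is sampled from.
            dx, dy = (x - cx) / scale, (y - cy) / scale
            sx = round(cx + cos_t * dx + sin_t * dy)
            sy = round(cy - sin_t * dx + cos_t * dy)
            row.append(image[sy][sx] if 0 <= sy < h and 0 <= sx < w else fill)
        out.append(row)
    return out


# Hypothetical 3x3 "face" image standing in for a real photograph.
face = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]

augmented = [
    horizontal_flip(face),
    shift(face, dx=1, dy=0),
    warp_nearest(face, scale=1.1),
    warp_nearest(face, degrees=10),
]
print(len(augmented), "augmented images from 1 original")
```

Applied to every image in a small dataset, transformations like these multiply the number of training samples without collecting new photographs.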

Inaccuracy and Bias in Facial Recognition

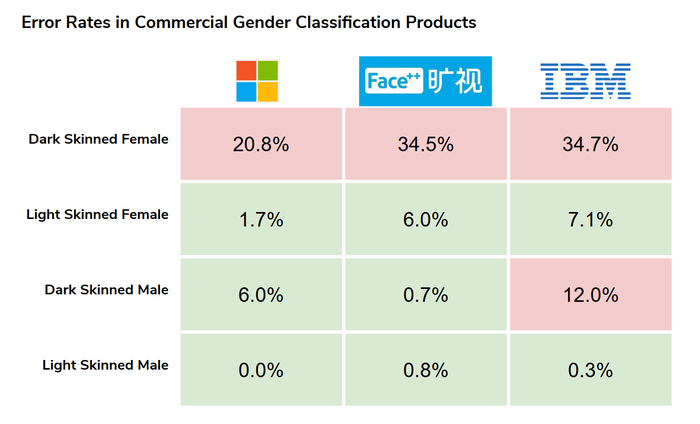

The previous section showed that the greater the variation in the training dataset, the higher the accuracy of the facial recognition model. One of the key issues with facial recognition software is that training datasets typically lack diversity. The underrepresentation of Black, Brown, female, and young subjects in these datasets makes the algorithms less accurate for those groups, and darker-skinned women are affected the most. For instance, the “Gender Shades” project showed error rates up to 34 percentage points higher for darker-skinned female subjects than for lighter-skinned male subjects. [4] A year later, a federal study further showed egregious disparities on the basis of race, gender, and age. For instance, “Asian and African American people were up to 100 times more likely to be misidentified than white men.” [5]
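
Disparities of this kind only become visible when error rates are computed separately for each demographic group rather than over the whole test set. The sketch below shows one way such a breakdown could be computed. The records are entirely made up for illustration and are not results from either study, and the function name `error_rate_by_group` is hypothetical.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the fraction of wrong predictions for each group.

    Each record is a tuple of (group, true_label, predicted_label).
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, true_label, predicted_label in records:
        totals[group] += 1
        if predicted_label != true_label:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up classifier outputs, for illustration only.
records = [
    ("lighter-skinned male", "male", "male"),
    ("lighter-skinned male", "male", "male"),
    ("lighter-skinned male", "male", "male"),
    ("lighter-skinned male", "male", "male"),
    ("darker-skinned female", "female", "female"),
    ("darker-skinned female", "female", "male"),
    ("darker-skinned female", "female", "female"),
    ("darker-skinned female", "female", "female"),
]

rates = error_rate_by_group(records)
overall = sum(1 for _, t, p in records if t != p) / len(records)
gap = max(rates.values()) - min(rates.values())

print("overall error rate:", overall)  # 0.125
print("per-group error rates:", rates)
print("gap between groups:", gap)      # 0.25
```

In this toy data the overall error rate of 12.5% hides the fact that every error falls on one group, which is why a single aggregate accuracy figure can mask bias.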

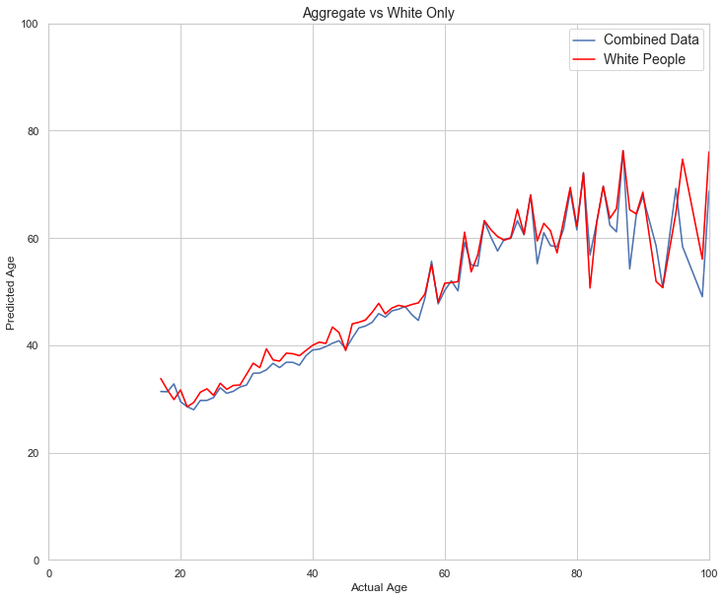

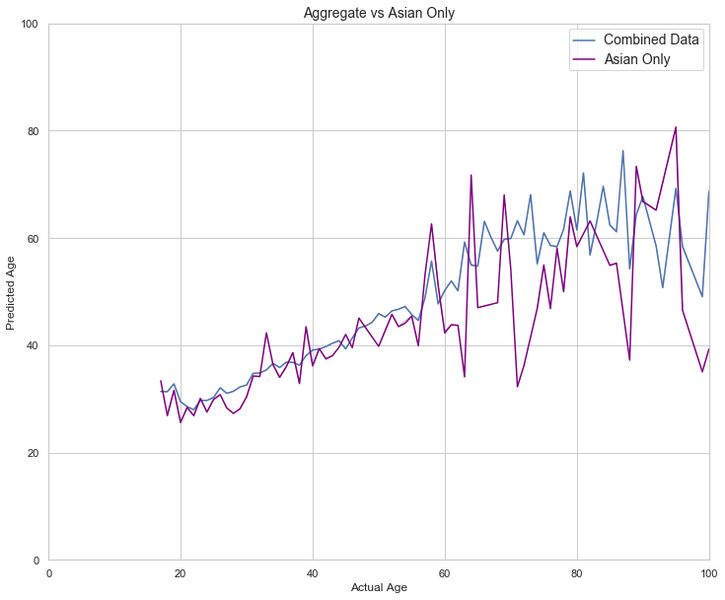

A separate study tested specifically whether CNN models for facial analysis also display bias on the basis of race, gender, and age. [6] The study employed the VGG-Face architecture, a 16-layer CNN with 13 convolution layers, 2 fully-connected layers, and a softmax output. The model was trained on a large dataset compiled from Wikipedia, IMDb, and UTKFace, and its goal was to analyze an image and predict the age of the subject. The experiments revealed substantial bias in the model. For instance, because the face dataset consisted predominantly of white subjects, there was clear racial bias in the accuracy of the results.

As the use of facial recognition increases across the globe, so does the need for algorithms to be trained on more representative and comprehensive datasets. Other solutions include establishing standards of image quality for subjects of all skin colors, regular auditing, and new legislation to regulate how mass surveillance is conducted and how law enforcement uses the technology.

References

1. Saha, Sumit. “A Comprehensive Guide to Convolutional Neural Networks.” Towards Data Science, 15 Dec. 2018, https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53.

2. IBM Cloud Education. “What Are Convolutional Neural Networks?” IBM, 20 Oct. 2020, https://www.ibm.com/cloud/learn/convolutional-neural-networks.

3. Lu, Peng, Baoye Song, and Lin Xu. “Human Face Recognition Based on Convolutional Neural Network and Augmented Dataset.” Systems Science & Control Engineering, vol. 9, sup2, 2021, pp. 29-37, DOI: 10.1080/21642583.2020.1836526.

4. Buolamwini, Joy, and Timnit Gebru. “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification.” Proceedings of Machine Learning Research, vol. 81, 2018, http://proceedings.mlr.press/v81/buolamwini18a/buolamwini18a.pdf.

5. Harwell, Drew. “Federal Study Confirms Racial Bias of Many Facial-Recognition Systems, Casts Doubt on Their Expanding Use.” The Washington Post, WP Company, 21 Dec. 2019, https://www.washingtonpost.com/technology/2019/12/19/federal-study-confirms-racial-bias-many-facial-recognition-systems-casts-doubt-their-expanding-use/.

6. Meade, Rachel. “Bias in Machine Learning: How Facial Recognition Models Show Signs of Racism, Sexism and Ageism.” Towards Data Science, 13 Dec. 2019, https://towardsdatascience.com/bias-in-machine-learning-how-facial-recognition-models-show-signs-of-racism-sexism-and-ageism-32549e2c972d.