Cancer Detection Using Convolutional Neural Networks

By Zachary Somma

Cancer is a massive global health problem. It is the second leading cause of death worldwide[1] and one of the largest medical expenses, both for individuals who are diagnosed and for researchers looking for a cure[2]. Diagnosis is the first and arguably most important step in treatment, because early diagnosis of cancer drastically improves survival rates and lowers the cost of treatment[3].

Medical imaging techniques[4] are some of the most common ways of diagnosing cancer. Images produced by a machine (specific to the test) are analyzed by physicians and other experts to determine whether a tumor is present and, if so, what type it is[5]. This method has several limitations: human error in analysis, the time cost of having people review the images, and the fact that someone is not always immediately available to make the diagnosis, especially given the shortage of physicians across the U.S. and worldwide[6]. Neural networks, specifically Convolutional Neural Networks (CNNs), offer a potential solution to each of these limitations by automating the diagnostic analysis[7].

Convolutional Neural Networks

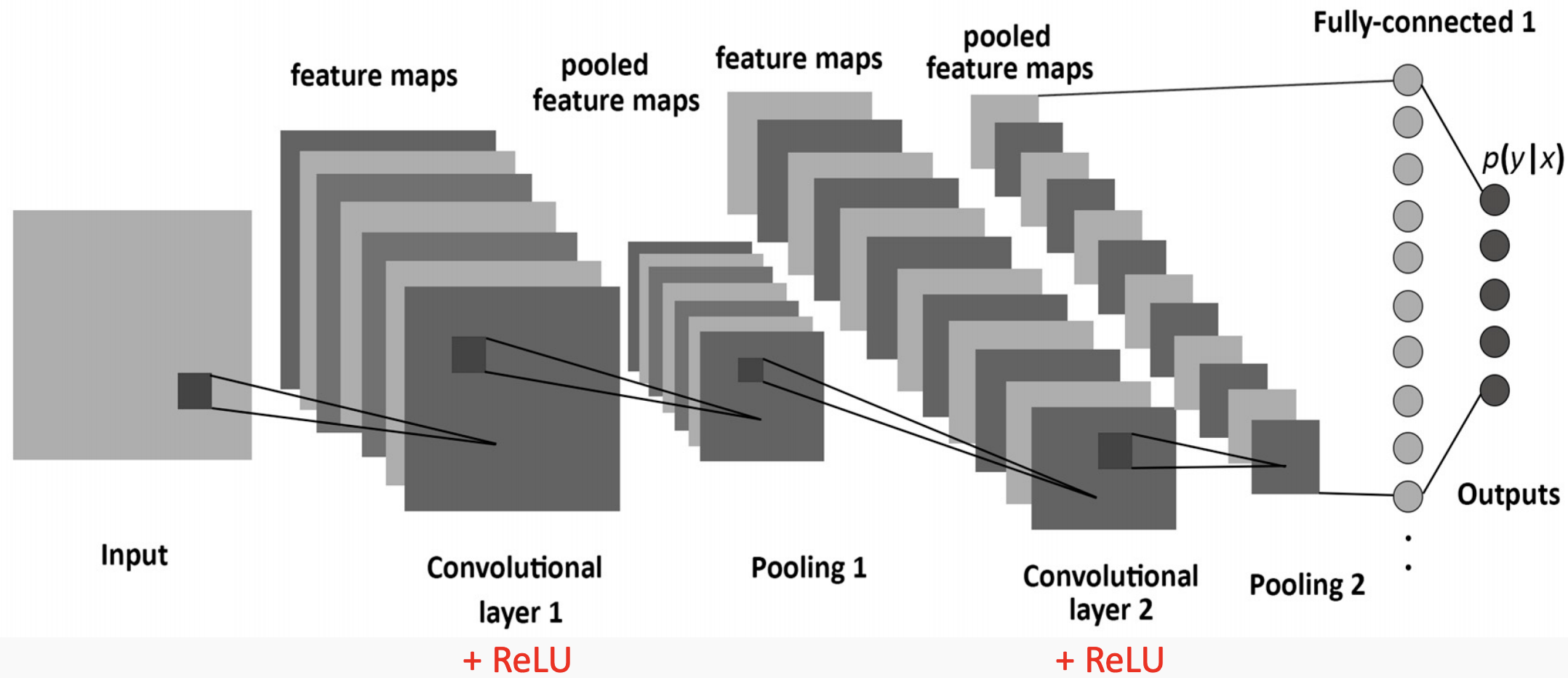

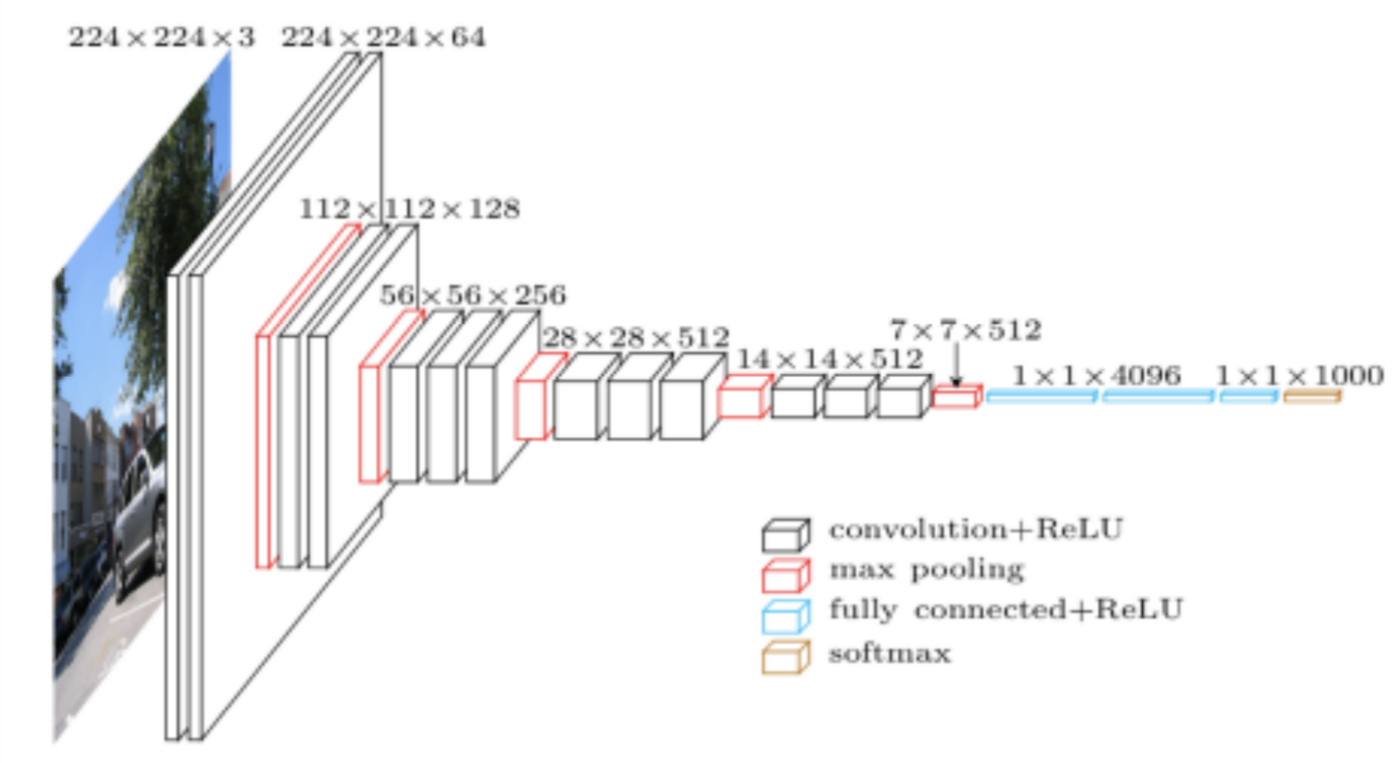

Convolutional Neural Networks are a special type of artificial neural network that is particularly good at categorizing images or other grid-like datasets. Their architecture is sparse, topographic, feed-forward, multilayer, and supervised, with linear operations on some layers and non-linear operations on others[8].

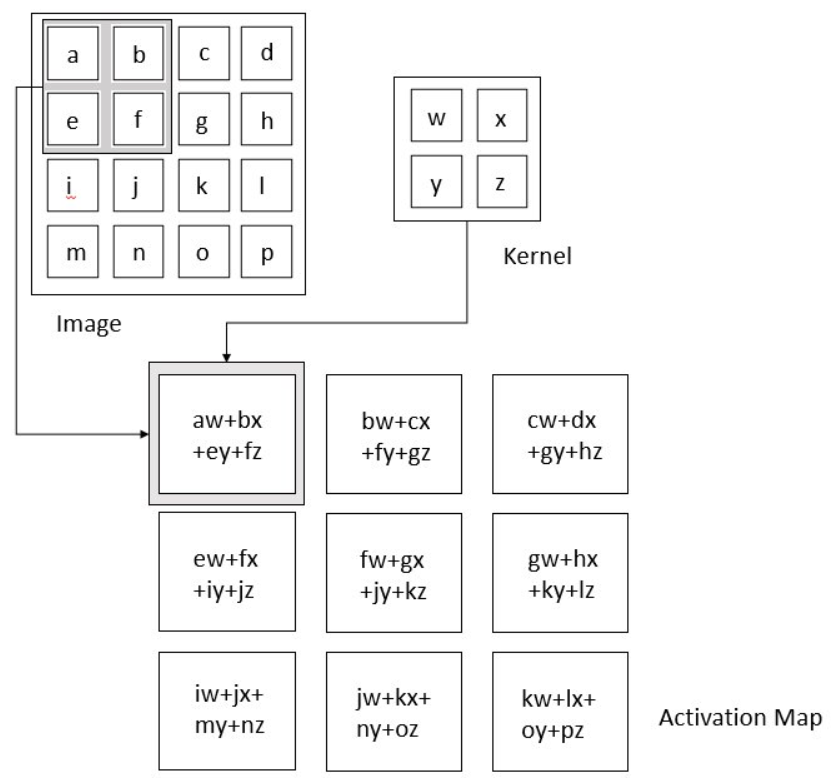

Images first pass through the input layer, where each pixel is assigned a value based on its darkness. These values are then passed on to the first hidden layer, called the convolutional layer. This layer applies a filter: a matrix of pre-determined dimensions whose weight values are learned during training. The dot product is calculated between the filter and every segment of the image that has the same dimensions as the filter. The filter's values can be trained to identify a specific characteristic of an image, so as the filter scans over each segment, the dot product essentially indicates whether that section of the image contains the desired feature. This layer of calculations can be repeated with a separate filter for each characteristic being detected[9].
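The sliding dot product described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a trained network: the 4x4 image and the hand-picked `vertical_edge` filter are assumptions made for the example, and the function name `convolve2d` is hypothetical. It uses a stride of 1 and no padding.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            # Dot product between the filter and the image patch whose
            # top-left corner is at (r, c).
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked (not trained) 2x2 filter that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The middle column of the resulting feature map holds the largest values, because that is where the patches straddle the edge the filter was designed to detect. In a real CNN the filter values would be learned rather than chosen by hand.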

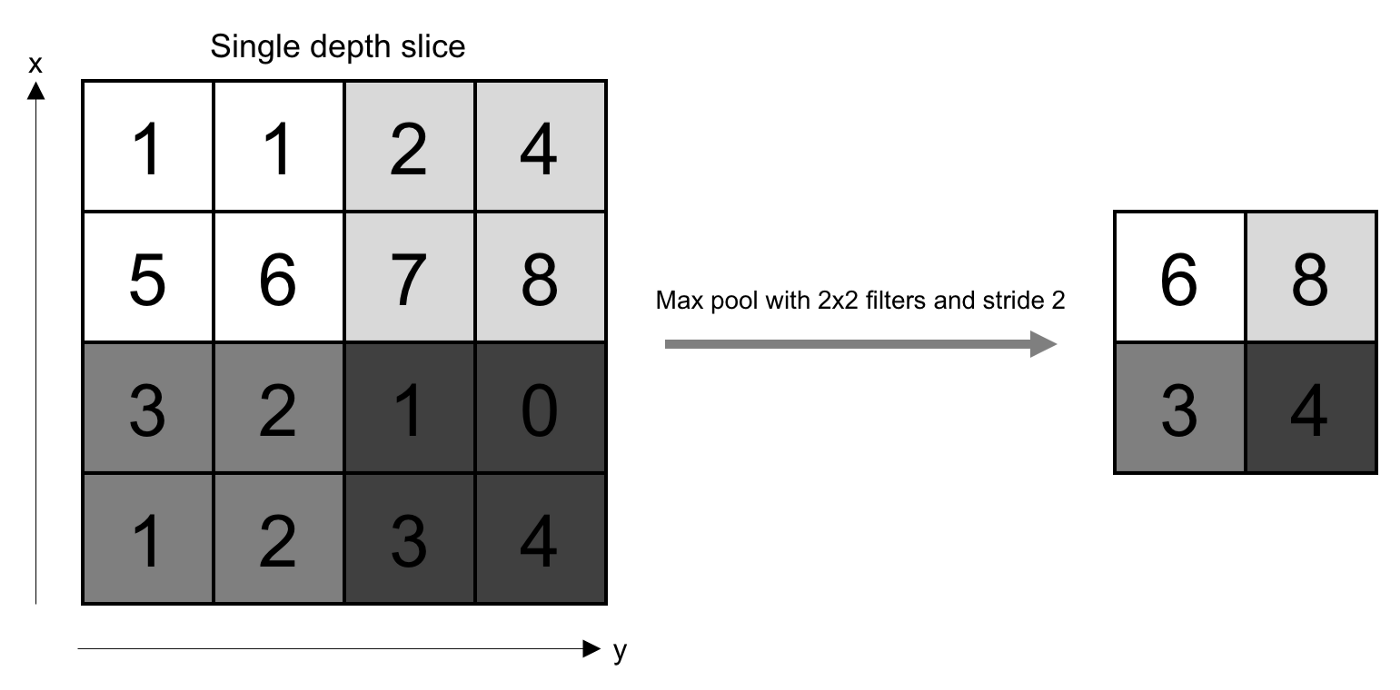

All of the dot product values are then output onto a feature map (sometimes called an activation map), which holds these dot products in the same topography as the original image. The feature map is sent to the next layer, called the pooling layer. Another filter of some specific size is used here, but instead of calculating a dot product, it simply carries over the largest value in each section it looks at. The main function of this layer is to reduce the dimensionality of the feature map so that only the most important data points are passed on to the later classification[10].
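A minimal sketch of this max pooling step follows, again in plain Python. The 2x2 window, the stride of 2, and the example feature map values are assumptions chosen for illustration; `max_pool` is a hypothetical helper name.

```python
def max_pool(feature_map, size=2, stride=2):
    """Keep only the largest value from each size x size window of the feature map."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, stride):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, stride):
            window = [
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map produced by a convolutional layer.
feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 5],
    [1, 1, 3, 7],
]

pooled = max_pool(feature_map)
for row in pooled:
    print(row)
```

Each 2x2 block of the input collapses to a single number, so the 4x4 map becomes a 2x2 map while the strongest response in each region is preserved.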

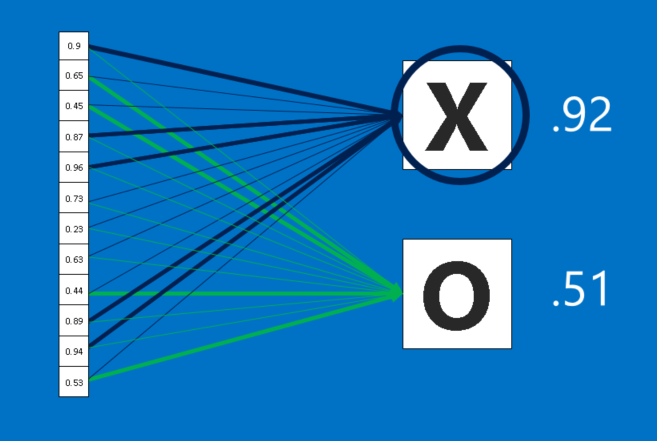

The pooled data is then sent to the third and final hidden layer, which is the fully connected layer. This layer is identical to a perceptron in that it has a matrix of weight values that are combined with the pooled data points in a dense architecture, which identifies how each data point contributes to a certain category. It is as if each of the data points is casting a vote as to which category it believes the image should be a part of. These values are then sent to the output layer, which assigns a category to the input image[11].
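The "voting" performed by the fully connected layer can be sketched as a weighted sum per category. The weights, the two category labels, and the use of a softmax to turn scores into probabilities are all assumptions for this example; the text above does not specify how the output layer normalizes its scores, and softmax is simply a common choice.

```python
import math


def fully_connected(inputs, weights, biases):
    """Return one score per category: a weighted sum of every input plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical pooled values, flattened into a single list.
pooled = [6, 2, 2, 9]

# Hypothetical categories and hand-picked (not trained) weights, one row per category.
labels = ["no tumor", "tumor"]
weights = [
    [0.1, 0.2, 0.2, -0.3],   # votes toward "no tumor"
    [-0.1, 0.0, 0.1, 0.4],   # votes toward "tumor"
]
biases = [0.0, 0.0]

scores = fully_connected(pooled, weights, biases)
probabilities = softmax(scores)
predicted = labels[probabilities.index(max(probabilities))]
print(predicted)
```

Every pooled value contributes to every category's score, which is what makes the layer dense; the category with the highest total is the one assigned to the image.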

Diagnostic Performance

CNNs are tested most frequently on lung, breast, and brain tumors, both because these tumors are easier to detect in medical images than many other types of cancer and because they are three of the deadliest cancers.

Brain Cancer

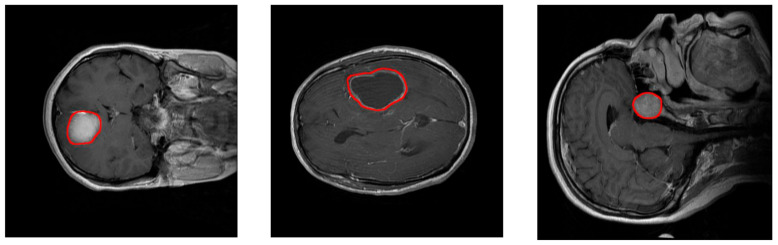

CNNs have been shown to be very effective at diagnosing brain cancers using MRI images. In one study, researchers created three separate 16-layer CNNs: Network 1 determines whether a tumor is present, Network 2 determines the type of tumor present, and Network 3 determines the severity grade of the tumor. On a sample of several thousand images each, Network 1 successfully categorized 99.33% of them, Network 2 had a 92.66% accuracy rate, and Network 3 had a 98.14% accuracy rate[12]. Other networks have been created to try to improve on the success rate of Network 2. The highest classification accuracy achieved was 97.3%, using a multiscale model consisting of three parallel processing pathways that each operate at a different spatial scale. This modification better extracted the textures of each type of tumor[13]. A newer type of network, called a capsule network, is also being used for brain tumor classification. Capsule networks use an algorithm very similar to that of CNNs, but they can detect data transformations and rotations using far fewer training inputs. This is extremely beneficial for tumor detection, as tumors can grow in any location and any orientation in the brain. The highest accuracy obtained was 86.56% with only one convolutional layer, suggesting room for improvement with the addition of more layers[14].

Breast Cancer

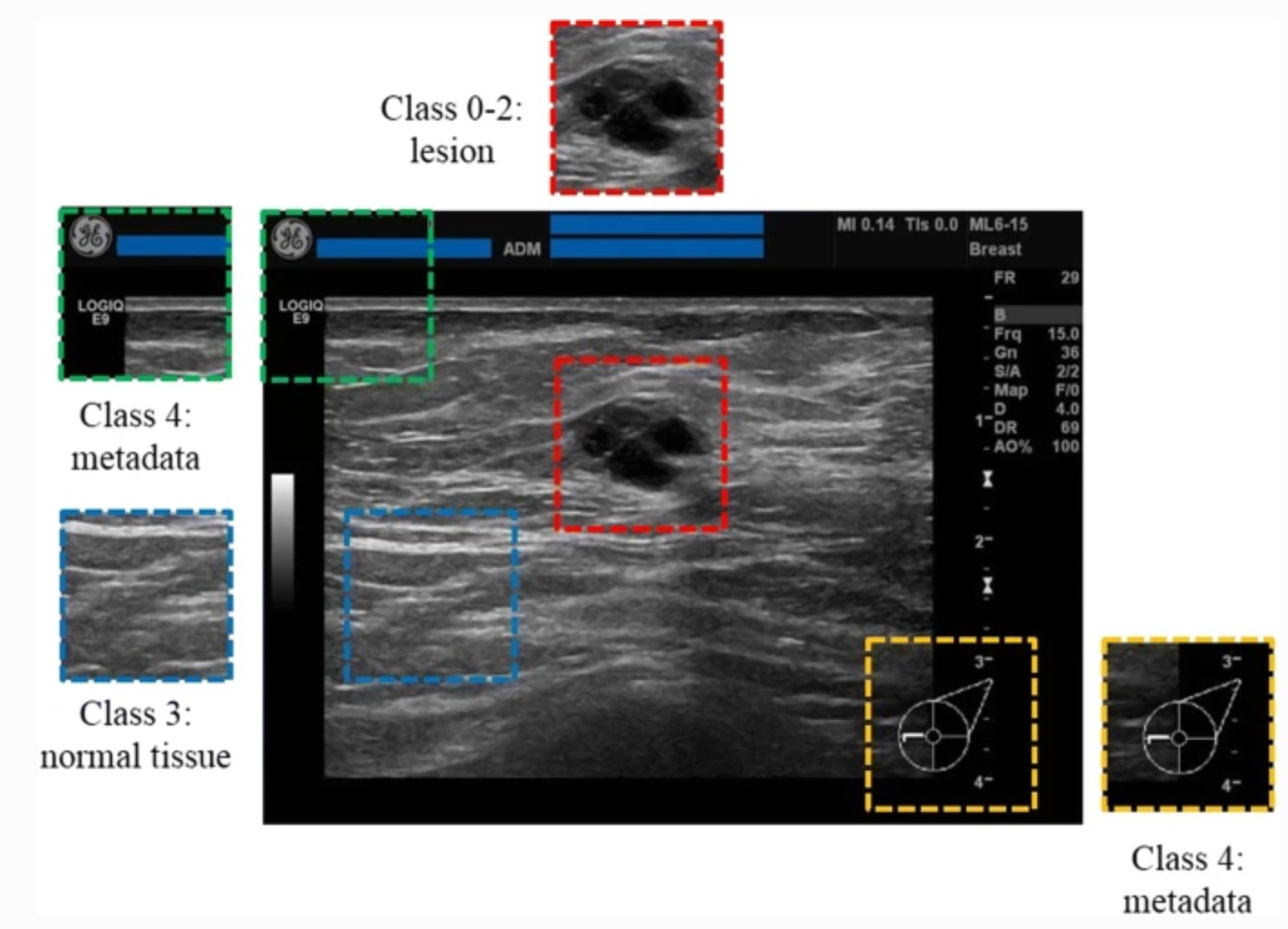

CNNs are currently capable of distinguishing between normal breast tissue, cystic masses, tumors, and other breast lesions visible in ultrasound images with 87.1% accuracy, an improvement over expert human readers, who logged 79.2% accuracy on the same dataset[15]. Several networks have also been constructed for mammogram analysis, which can classify masses or tumors, breast density, breast asymmetry, and calcifications. Almost all of these networks perform as well as humans, if not better. The dataset used tumors at early enough stages that their successful detection would increase the patient's chances of survival by about 40%[16].

Lung Cancer

A CNN designed with 12 layers and over 4,000 filters analyzed lung CT scans to classify lung tumors as not present, benign, or malignant. This network achieved a 93.55% accuracy rate, with a 95% rate for both sensitivity and specificity[17]. Another network was designed with a RetinaNet architecture to interpret both X-ray and CT scans. Originally created by Facebook, the RetinaNet architecture organizes features into a feature pyramid rather than a single feature map, layering features at different resolutions for a more accurate representation of the image. It also uses a focal loss function, which reduces the influence of examples the network already classifies easily so that training concentrates on the examples it misclassifies[18]. This network mirrored expert human performance in classifying lung tumors from CTs and X-rays, with 43 true positive classifications, 26 false positives, and 22 false negatives. Only 2 of the false positives resulted from other foreign bodies, the best result obtained by a CNN for this task[19].
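To put the RetinaNet counts in perspective, the sketch below derives sensitivity, precision, and an F1 score from the 43 true positives, 26 false positives, and 22 false negatives reported above. These derived figures are computed here for illustration and are not quoted from the cited study. No true negative count is given, so specificity is not calculated. The function names are hypothetical.

```python
def sensitivity(tp, fn):
    """Fraction of actual tumors that were detected (also called recall)."""
    return tp / (tp + fn)


def precision(tp, fp):
    """Fraction of positive calls that were actually tumors."""
    return tp / (tp + fp)


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and sensitivity."""
    p = precision(tp, fp)
    s = sensitivity(tp, fn)
    return 2 * p * s / (p + s)


# Counts reported for the RetinaNet-based network.
tp, fp, fn = 43, 26, 22

sens = sensitivity(tp, fn)
prec = precision(tp, fp)
f1 = f1_score(tp, fp, fn)

print(f"sensitivity: {sens:.3f}")
print(f"precision:   {prec:.3f}")
print(f"F1 score:    {f1:.3f}")
```

Sensitivity here is 43 out of 65 actual tumors and precision is 43 out of 69 positive calls, which shows that the same set of counts can look quite different depending on which metric is reported.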

Implementation Concerns

Practical barriers may prevent the implementation of CNNs for tumor diagnosis in the near future. Several factors unrelated to the content of the image being analyzed sometimes affect the results of the network, including the specific type of machine used. For example, X-rays taken on portable machines tend to show more positive diagnoses than those taken on stationary machines[20]. CNNs are also more likely to produce false negative results on MRI tumor detection if the tumor is small or diffuse[21].

Ethical concerns are also abundant in all neural network-assisted diagnoses. Public trust in artificial intelligence (AI) as a whole is rather low: people are more afraid of potential errors made by AI than of potential human errors[22]. This is especially true of medical assistance AI such as CNNs. Improving public trust has been identified by many as the biggest change necessary for the implementation of medical assistance AI[23]. This fear partially stems from a concept known as automation complacency, the tendency of humans to trust the outputs of AI enough that they fail to make necessary checks on their accuracy[24]. One study on assisted electrocardiogram readings demonstrated that neural network assistance improved overall diagnostic accuracy, but that physicians were influenced by incorrect advice in a significant number of cases[25].

An issue that must be addressed with CNNs specifically is how they handle uncertainty and error. Currently, CNNs and physicians have different biases in how they handle error. Physicians are more likely to “err on the side of caution” and give a false positive rather than a false negative. CNNs, however, are slightly more likely to give false negatives than false positives, which could lead to negative patient outcomes[26].
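One common way to shift a classifier's balance between false negatives and false positives is to change the score threshold above which a case is called positive. The sketch below illustrates that trade-off on made-up data. The scores, labels, and thresholds are hypothetical, and nothing here is taken from the cited studies or claimed to be how any particular diagnostic CNN is tuned.

```python
def confusion_counts(scores, labels, threshold):
    """Return (false_positives, false_negatives) when calling score >= threshold positive."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return fp, fn


# Hypothetical network outputs (estimated tumor probability) and true labels
# (1 = tumor present, 0 = no tumor).
scores = [0.05, 0.20, 0.35, 0.45, 0.55, 0.70, 0.90]
labels = [0, 0, 1, 0, 1, 1, 1]

default = confusion_counts(scores, labels, threshold=0.5)
cautious = confusion_counts(scores, labels, threshold=0.3)

print("threshold 0.5 -> (FP, FN):", default)
print("threshold 0.3 -> (FP, FN):", cautious)
```

Lowering the threshold in this toy example removes the missed tumor at the cost of one extra false alarm, which is closer to the cautious bias attributed to physicians above.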

Gallery

Figure 1. The layers of a standard CNN

Figure 2. Complete architecture of a standard CNN, showing length, width, and depth dimensionality

Figure 3. An animation of the convolutional calculations

Figure 4. Convolution calculations using dot product. The kernel is another term for the filter.

Figure 5. The pooling layer selects the highest value from each section

Figure 6. The fully connected layer performing classifications for each pooled value

Figure 7. MRI images of a meningioma tumor (left), glioma tumor (middle), and pituitary tumor (right)

Figure 8. An example of normal breast tissue and a breast lesion in an ultrasound image

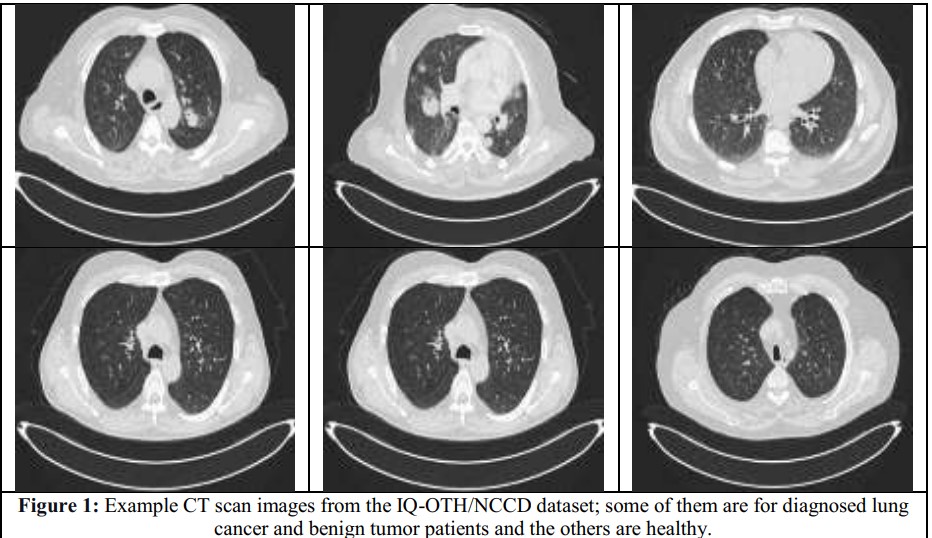

Figure 9. CT images of lungs with and without tumors

References

Roser, M., & Ritchie, H. (2015). Cancer. Our World in Data. [27]

Levey, N. (2022, July 9). She was already battling cancer. Then she had to fight the bill collectors. NPR. [28]

Robeznieks, A. (2022, April 13). Doctor shortages are here—And they’ll get worse if we don’t act fast. American Medical Association. [29]

Early cancer diagnosis saves lives, cuts treatment costs. (2017, February 3). World Health Organization. [30]

How Cancer Is Diagnosed. (2019, July 17). National Cancer Institute. [31]

Deep Learning in Medical Diagnosis: How AI Saves Lives and Cuts Treatment Costs. (2020, April 23). AltexSoft. [32]

Mishra, M. (2020, September 2). Convolutional Neural Networks, Explained. Medium. [33]

Stewart, M. (2020, July 29). Simple Introduction to Convolutional Neural Networks. Medium. [34]

Rohrer, B. (2016, August 18). How do Convolutional Neural Networks work? [35]

Irmak, E. (2021). Multi-Classification of Brain Tumor MRI Images Using Deep Convolutional Neural Network with Fully Optimized Framework. Iranian Journal of Science and Technology, Transactions of Electrical Engineering, 45(3), 1015–1036. [36]

Díaz-Pernas, F. J., Martínez-Zarzuela, M., Antón-Rodríguez, M., & González-Ortega, D. (2021). A Deep Learning Approach for Brain Tumor Classification and Segmentation Using a Multiscale Convolutional Neural Network. Healthcare, 9(2), 153. [37]

Afshar, P., Mohammadi, A., & Plataniotis, K. N. (2018). Brain Tumor Type Classification via Capsule Networks (arXiv:1802.10200). arXiv. [38]

Ciritsis, A., Rossi, C., Eberhard, M., Marcon, M., Becker, A. S., & Boss, A. (2019). Automatic classification of ultrasound breast lesions using a deep convolutional neural network mimicking human decision-making. European Radiology, 29(10), 5458–5468. [39]

Abdelrahman, L., Al Ghamdi, M., Collado-Mesa, F., & Abdel-Mottaleb, M. (2021). Convolutional neural networks for breast cancer detection in mammography: A survey. Computers in Biology and Medicine, 131, 104248. [40]

Al-Yasriy, H. F., AL-Husieny, M. S., Mohsen, F. Y., Khalil, E. A., & Hassan, Z. S. (2020). Diagnosis of Lung Cancer Based on CT Scans Using CNN. IOP Conference Series: Materials Science and Engineering, 928(2), 022035. [41]

Anwla, P. K. (2020, August 15). RetinaNet Model for object detection explanation. TowardsMachineLearning. [42]

Schultheiss, M., Schober, S. A., Lodde, M., Bodden, J., Aichele, J., Müller-Leisse, C., Renger, B., Pfeiffer, F., & Pfeiffer, D. (2020). A robust convolutional neural network for lung nodule detection in the presence of foreign bodies. Scientific Reports, 10(1), Article 1. [43]

Zech, J. R., Badgeley, M. A., Liu, M., Costa, A. B., Titano, J. J., & Oermann, E. K. (2018). Confounding variables can degrade generalization performance of radiological deep learning models. PLOS Medicine, 15(11), e1002683. [44]

Shimauchi, A., Jansen, S. A., Abe, H., Jaskowiak, N., Schmidt, R. A., & Newstead, G. M. (2010). Breast cancers not detected at MRI: Review of false-negative lesions. AJR. American Journal of Roentgenology, 194(6), 1674–1679. [45]

McKendrick, J. (2021, August 30). Artificial Intelligence’s Biggest Stumbling Block: Trust. Forbes. [46]

Kerasidou, C. (Xaroula), Kerasidou, A., Buscher, M., & Wilkinson, S. (2022). Before and beyond trust: Reliance in medical AI. Journal of Medical Ethics, 48(11), 852–856. [47]

Parasuraman, R., & Manzey, D. H. (2010). Complacency and Bias in Human Use of Automation: An Attentional Integration. Human Factors, 52(3), 381–410. [48]

Tsai, T. L., Fridsma, D. B., & Gatti, G. (2003). Computer decision support as a source of interpretation error: The case of electrocardiograms. Journal of the American Medical Informatics Association: JAMIA, 10(5), 478–483. [49]

Challen, R., Denny, J., Pitt, M., Gompels, L., Edwards, T., & Tsaneva-Atanasova, K. (2019). Artificial intelligence, bias and clinical safety. BMJ Quality & Safety, 28(3), 231–237. [50]