Residual Networks

By Louisa Bay

Background

Since winning the 2015 ImageNet competition, Residual Networks (ResNets) have become one of the most influential works in computer vision and a crucial architecture for deep learning. By design, they allow networks with significantly more layers, giving the deepest layers room to detect more complex features without the drop in accuracy that earlier very deep networks suffered. For example, in image recognition, a ResNet may use its initial layers for elementary attributes such as lines and edges before progressively moving up to higher-level features such as shapes and objects, at depths previous networks could not reach. This versatility, which arises from the "skip connection" architecture, has led to much success, particularly in object recognition and facial recognition.

Precursor to ResNets

Deep Convolutional Neural Networks (DCNNs), convolutional neural networks (CNNs) with many stacked layers, were developed to learn from large datasets. Much like the later ResNets, DCNNs are used primarily for object detection and classification, although they have also been applied to natural language processing and financial analysis.

At its most basic level, a DCNN processing a color image takes a three-dimensional input: the image's height, its width, and three color channels (red, green, and blue). When the image is fed into the network, the convolutional layer applies a "convolution," an operation that combines the input's pixel values with a set of learned weights. More specifically, a kernel (a small matrix of weights) slides across the image, and at each position it computes a dot product with the patch of pixels beneath it, producing a single output pixel that is the weighted sum of those input values. The grid of these outputs, called a feature map, highlights where features such as edges and shadows appear. The pooling layer follows the convolutional layer and downsamples the feature map, for example by keeping only the largest value in each small window, which reduces computation while preserving the strongest responses. Together, these layers formed a distinctive architecture that allowed for advanced and efficient identification software before ResNets.
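
To make the convolution and pooling steps concrete, here is a minimal sketch in plain Python. It assumes a single-channel (grayscale) image, one hand-picked 2x2 kernel, no padding, and a stride of 1. A real convolutional layer would learn its kernel weights, sum over all three color channels, and usually add a bias; those details are omitted here. As in most deep-learning libraries, the kernel is not flipped, so strictly speaking this computes a cross-correlation. The names `conv2d` and `max_pool` are illustrative and not taken from any particular library.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Each output pixel is the dot product of the kernel with the image patch
    beneath it, i.e. a weighted sum of that patch's pixel values.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


def max_pool(feature_map, size=2):
    """Downsample by keeping the largest value in each non-overlapping size x size window.

    Rows or columns left over at the edge (when the map is not a multiple
    of `size`) are dropped.
    """
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


# A 5x5 image that is dark (0) on the left and bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# This kernel responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

feature_map = conv2d(image, kernel)
print(feature_map)            # [[0.0, 2.0, 0.0, 0.0], ...] (4 identical rows)
print(max_pool(feature_map))  # [[2.0, 0.0], [2.0, 0.0]]
```

The 4x4 feature map is 2.0 only in the column where dark meets bright, marking the vertical edge; pooling keeps that edge response at a quarter of the size.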

ResNets

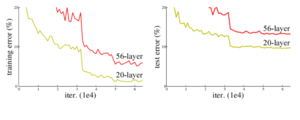

For the most part, DCNNs were highly successful and are still used in a vast number of object detection applications. However, they had a few issues at their core. Early researchers believed that to make a network more successful, it was crucial to add layers in the hope that each additional layer would pick up novel features. However, in trying this out, they found a degradation problem: as layers were added, accuracy saturated and then degraded, and this was not due to overfitting, because the deeper networks also had higher training error. This finding meant that, in empirically tested networks, there was a practical limit on depth beyond which error increased. Improving accuracy was not as simple as just "going deeper." This roadblock, the upper limit on useful depth, is the main issue ResNets solved.[1]

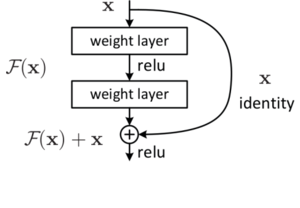

Skip connections existed before ResNets, but He et al. applied them to give deeper layers direct access to earlier ones and thereby solve the problem above. As the name suggests, a skip connection feeds the output of one layer into the input of a later one, jumping over the layers in between. In a ResNet, the skipped block's input is added to the block's output, so the stacked layers only need to learn a residual correction to their input rather than an entirely new mapping. This gives the network an alternative path, so layers are not restricted to passing information only to and from their immediate neighbors.[2] With these connections, He et al. were able to train far deeper networks, which in turn increased accuracy. Their 152-layer ResNet was the deepest network used on the ImageNet dataset at the time and far outperformed previous networks. The same held for the numerous other datasets they tested their work on.
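
The skip connection can be illustrated with a toy residual block in the form y = ReLU(F(x) + x). This sketch assumes two small fully connected layers in place of the convolutional layers (and batch normalization) that a real ResNet block uses; `dense`, `relu`, and `residual_block` are hypothetical helper names. It also shows why extra residual blocks need not hurt accuracy: if a block's weights are all zero, F(x) = 0, and the block passes its (non-negative) input through unchanged, so a deeper network can always at least match a shallower one.

```python
def relu(v):
    """Elementwise ReLU: negative entries become 0."""
    return [max(0.0, x) for x in v]


def dense(weights, v):
    """Hypothetical fully connected layer without bias: returns weights @ v."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def residual_block(x, w1, w2):
    """Return relu(F(x) + x), where F(x) = w2 @ relu(w1 @ x).

    The skip connection adds the block's input x directly to the output of
    the two stacked layers, so those layers only have to learn the residual F(x).
    """
    f = dense(w2, relu(dense(w1, x)))
    return relu([fi + xi for fi, xi in zip(f, x)])


zeros = [[0.0, 0.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]

# Ten stacked zero-weight blocks behave as an identity mapping.
y = [1.0, 2.0]
for _ in range(10):
    y = residual_block(y, zeros, zeros)
print(y)  # [1.0, 2.0]

# With non-zero weights, the block adds its learned residual to the input.
print(residual_block([1.0, 2.0], identity, identity))  # [2.0, 4.0]
```

Without the `+ x` term, ten zero-weight layers would map every input to zeros, which is one way to see why plain deep stacks are harder to optimize than residual ones.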

A Successful ResNet Example in Facial Recognition: LARNet

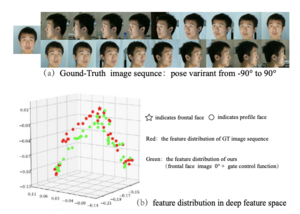

Unlike static image recognition, recognizing objects across viewing angles requires a system to take in much more information. Faces are especially challenging, given the vast variability in face shape and size across individuals and the large difference in appearance between the front view, the side profile, and all the positions in between. One practical method for facial recognition is pose-invariant learning, which trains on facial images captured from different poses. However, this is very difficult for the reasons above: expressions and poses vary widely between individuals.[3] Yang et al. (2021) propose a different approach called a Lie Algebraic Residual Network (LARNet), which allows for “pose robust” general face recognition.[4]

Yang et al. recognized that head rotations produce a range of views of a single face, between frontal and profile. However, a rotation matrix cannot easily be built into a convolutional neural network (a common type of artificial neural network, or ANN, that can assign importance to aspects of visual input to differentiate between images).[5] They were nevertheless able to create a rotation-aware design using three main components. The first was a ResNet-50 structure, which served as the base (the digits following the dash give the number of layers, 50 in this case, a depth found to offer a good balance between efficiency and accuracy). Yang et al. also used a LARNet (a Lie Algebraic Residual Network consisting of a residual subnet that decodes the rotational information and a gating subnet that learns the rotational magnitude during feature learning). The final component is LARNet+, an end-to-end variant (one in which all components are trained jointly, from raw input to final output) included for completeness. Overall, LARNet is highly successful at facial recognition, reaching a verification rate of up to 98.02% on one dataset of 3,425 videos of 1,595 people and similarly high verification rates on other datasets, outperforming nearly all competing methods.
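
The residual-plus-gate idea behind LARNet can be sketched as follows. This is a heavily simplified illustration under stated assumptions, not Yang et al.'s implementation: the residual subnet and the gating subnet are each reduced to a single linear layer, the gate is a sigmoid producing one scalar in (0, 1), and the output is assumed to be the base feature vector plus the gated residual. All function and parameter names (`gated_residual`, `res_w`, `gate_b`, and so on) are hypothetical.

```python
import math


def linear(weights, bias, v):
    """Hypothetical fully connected layer: weights @ v + bias."""
    return [sum(w * x for w, x in zip(row, v)) + b
            for row, b in zip(weights, bias)]


def sigmoid(z):
    """Squash z into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def gated_residual(features, res_w, res_b, gate_w, gate_b):
    """Simplified gated residual update: features + gate * residual(features).

    Assumed residual subnet: one linear layer estimating a rotation-related
    correction to the feature vector.
    Assumed gating subnet: one linear unit plus a sigmoid, giving a scalar
    that scales how much of that correction is applied.
    Returns the updated features and the gate value.
    """
    residual = linear(res_w, res_b, features)
    gate = sigmoid(sum(w * x for w, x in zip(gate_w, features)) + gate_b)
    updated = [f + gate * r for f, r in zip(features, residual)]
    return updated, gate


identity = [[1.0, 0.0], [0.0, 1.0]]

# Zero gate weights and bias give sigmoid(0) = 0.5: half the residual is added.
out, gate = gated_residual([2.0, -4.0], identity, [0.0, 0.0], [0.0, 0.0], 0.0)
print(gate, out)  # 0.5 [3.0, -6.0]

# A strongly negative gate bias closes the gate: features pass through almost unchanged.
out, gate = gated_residual([2.0, -4.0], identity, [0.0, 0.0], [0.0, 0.0], -50.0)
print(out)
```

Under these assumptions, the gate lets the network decide per input how strongly to apply the learned correction, rather than always applying it at full strength.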

Notes

- [1] http://neural.vision/blog/article-reviews/deep-learning/he-resnet-2015/

- [2] https://towardsdatascience.com/intuition-behind-residual-neural-networks-fa5d2996b2c7

- [3] https://hcis-journal.springeropen.com/articles/10.1186/s13673-020-00250-w

- [4] https://arxiv.org/pdf/2103.08147.pdf

- [5] https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53