Cancer Detection Using Convolutional Neural Networks

Revision as of 23:43, 21 October 2022

By Zachary Somma

Convolutional Neural Networks

Convolutional neural networks (CNNs) are a type of artificial neural network that is particularly good at classifying images and other grid-like data. Their architecture is sparse, topographic, feed-forward, multilayer, and supervised, with linear operations on some layers and non-linear operations on others [2].

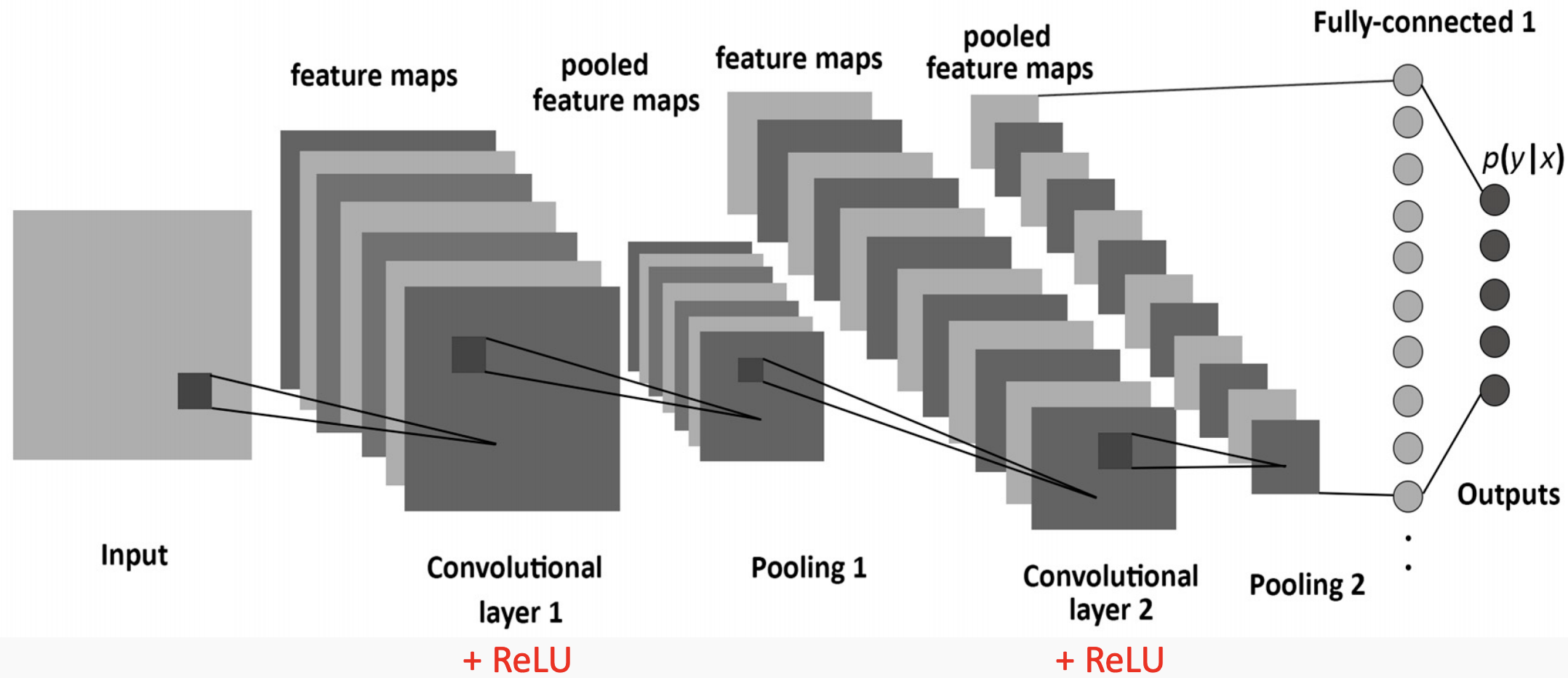

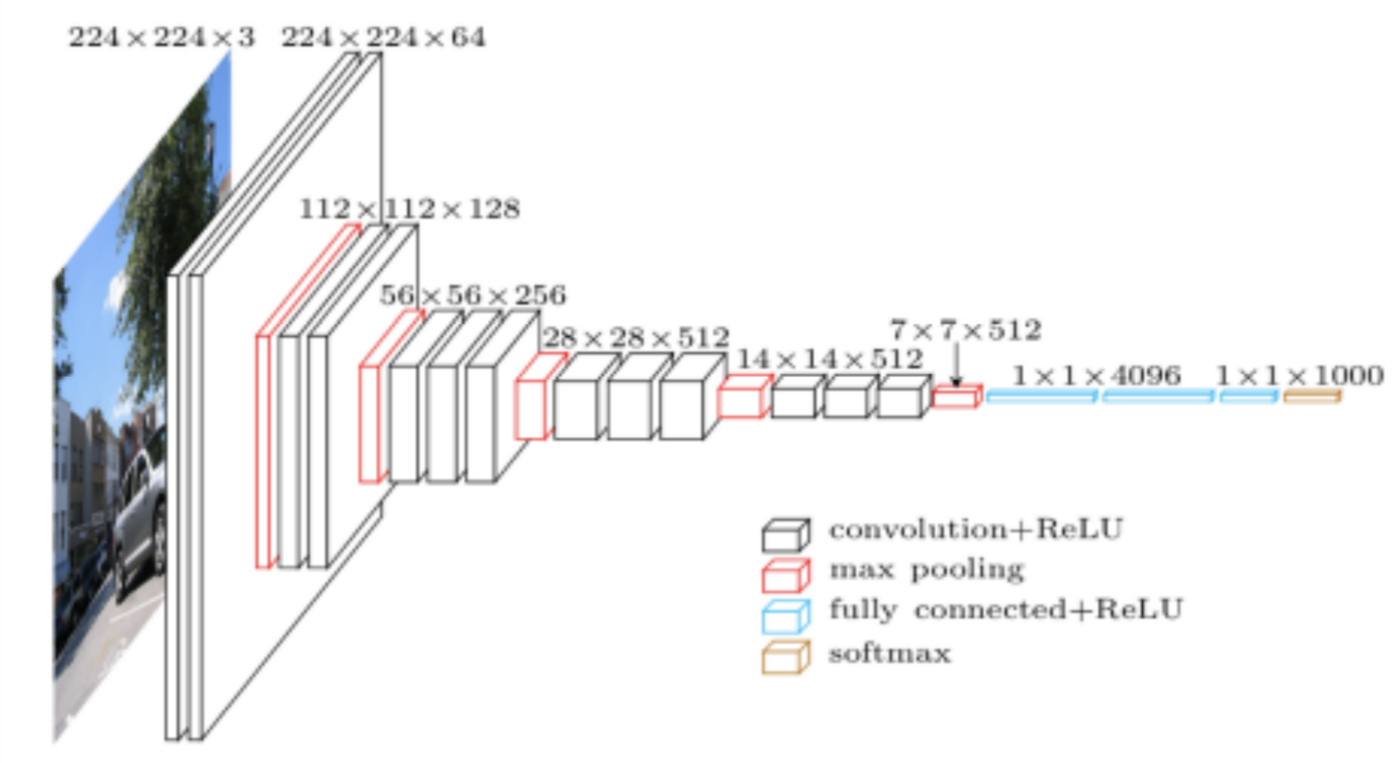

Images first pass through the input layer, where each pixel is assigned a value based on how dark it is. These values are then passed to the first hidden layer, the convolutional layer. This layer applies a filter: a matrix of predetermined dimensions whose weights are learned during training. The filter is compared against every segment of the image that has the same dimensions as the filter, and a dot product is calculated for each one. Because the filter's weights can be trained to respond to a specific characteristic of an image, the dot product at each position indicates how strongly that section of the image contains the desired feature. This calculation can be repeated with a separate filter for each characteristic being detected [2].
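The sliding dot product described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used by any particular library: the function name `convolve2d_valid`, the 4x4 image, and the hand-picked edge filter are all assumptions made for the example (in a real CNN the filter weights would be learned, not written by hand).

```python
def convolve2d_valid(image, kernel):
    """Slide `kernel` over `image` and return the map of dot products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Dot product between the filter and the image patch at (r, c).
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 grayscale image: left half 0, right half 1.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked 2x2 filter that responds to a vertical edge (assumed weights).
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d_valid(image, kernel)
for row in feature_map:
    print(row)
```

The largest values in the result sit in the middle column, which is where the edge between the two halves of the image lies; positions without the feature produce zero.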

All of the dot products are then written to a feature map (sometimes called an activation map), which arranges them in the same topography as the original image. The feature map is sent to the next layer, the pooling layer. A window of a fixed size is moved across the feature map here, but instead of calculating a dot product, it simply carries over the largest value in each section it covers (max pooling). The main function of this layer is to reduce the dimensionality of the feature map so that only the most important data points are passed on to the later classification [3].
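As a rough sketch of the pooling step, the following plain-Python function keeps only the largest value in each window. The function name `max_pool2d`, the 2x2 window, the stride of 2, and the sample feature map are assumptions chosen for illustration.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Keep the largest value from each size x size window."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, stride):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, stride):
            # Collect every value covered by the window at (r, c).
            window = [
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map.
feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]

pooled = max_pool2d(feature_map)
print(pooled)
```

With these settings the 4x4 map shrinks to 2x2, so the later layers see a quarter as many values while the strongest response in each region is preserved.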

The pooled data is then sent to the third and final hidden layer, the fully connected layer. This layer works like a perceptron: it has a matrix of weights that is combined with the pooled data points in a dense architecture, which determines how much each data point contributes to each category. It is as if each data point casts a vote for the category it believes the image belongs to. These values are then sent to the output layer, which assigns a category to the input image [4].
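The "voting" idea can be illustrated with a small sketch of a fully connected layer followed by an output step. Everything specific here is an assumption for the example: the function names, the weight values, the two labels `benign` and `malignant`, and the use of softmax to turn scores into probabilities (the text above does not say how the output layer picks a category).

```python
import math


def fully_connected(inputs, weights, biases):
    """One weighted sum per category: each input 'votes' via its weight."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical pooled output, flattened into a single vector.
pooled = [[4, 2], [2, 8]]
flat = [value for row in pooled for value in row]

# Assumed weights: one row per category, one column per pooled value.
labels = ["benign", "malignant"]
weights = [
    [0.5, 0.1, 0.1, -0.2],
    [-0.3, 0.0, 0.2, 0.4],
]
biases = [0.0, 0.0]

scores = fully_connected(flat, weights, biases)
probs = softmax(scores)
predicted = labels[probs.index(max(probs))]
print(predicted)
```

In a trained network the weights and biases would come from supervised training on labeled images; here they are fixed by hand only to show how the weighted sums lead to a single predicted category.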

Gallery

Figure 1. The layers of a standard CNN


Figure 2. Complete architecture of a standard CNN, showing length, width, and depth dimensionality


References

1. Roser, M., & Ritchie, H. (2015). Cancer. Our World in Data.

2. Mishra, M. (2020, September 2). Convolutional Neural Networks, Explained. Medium.

3. Stewart, M. (2020, July 29). Simple Introduction to Convolutional Neural Networks. Medium.

4. Rohrer, B. (2016, August 18). How do Convolutional Neural Networks work?